Choosing the best enterprise AI platform is a strategic decision with far-reaching ramifications. It goes far beyond simply “choosing an LLM”. It requires the business to account for performance, security, scaling, and a future-proofed stack. We cannot recommend the AWS AI stack highly enough.

In this comprehensive guide to AWS AI, we explain why. We will discuss the diverse AI tools AWS has built for startups and enterprises alike, examine its approach to security and compliance, and argue that AWS is the right choice for building and scaling AI.

Why enterprises are racing to adopt AI

With the wave of AI sweeping the world, teams are under pressure to deliver smart solutions, automate workflows and gain sharper customer insights as quickly as they can.

However, implementing AI at enterprise scale the right way is far from simple. Real-world implementations introduce complexity like regulatory hurdles, privacy concerns and tight security requirements. A misstep in deploying AI at the enterprise level can result in loss of customer trust or major fines. That’s why enterprise leaders turn to platforms that let them build Gen AI into their software on AWS while proactively managing new risks in privacy, compliance, bias and more.

To succeed, you need a platform built from the ground up for scale, security, and flexibility. This is where AWS leads in enterprise AI.

Why enterprises need a scalable AI foundation

Running an experiment with a small AI model or demo is easy. The true test begins when leadership expects those pilots to serve thousands, or even millions, of users across multiple regions while complying with strict regulations. This is where most enterprises hit a wall: turning a quick win into an enterprise-ready AI production pipeline.

A scalable AI foundation is essential. It provides structure and reliability, allows rapid growth, and safeguards systems, budgets, and customer trust. Scalability ensures your AWS AI models can process more data and serve more users without faltering. Reliability matters because downtime, even for an hour, can cost millions. Security and compliance are integral, especially in regulated industries where AI solutions must meet strict data privacy and audit standards.

What’s new for enterprise AI on AWS in 2026?

AWS re:Invent 2025 introduced a wave of Gen AI and agentic AI capabilities that strengthen AWS’s position as the default platform for serious enterprise AI. These updates focus on three themes that matter most to enterprises: more capable and cost-efficient models, production-grade agent tooling and faster paths from prototype to large-scale deployment.

Smarter, more efficient Nova 2 models for enterprise workloads

AWS expanded the Amazon Nova family with new frontier models that target real-world enterprise needs such as reasoning, multimodal understanding and speech. These models are designed to offer strong performance at a lower cost profile than many closed alternatives, making it easier to scale use cases from pilot to thousands or millions of users.

A key highlight is the next generation of Nova 2 models optimized for multimodal and conversational workloads, as well as specialized variants for speech-to-speech and automation scenarios. Together, they give enterprises more flexibility to match cost, latency, and capability to each use case, from knowledge assistants to complex workflow automation.

Check out our guide to Nova 2 models.

Nova Forge: custom frontier models without starting from scratch

With Nova Forge, AWS now lets enterprises build customized frontier models on top of pre-trained Nova checkpoints, combining Amazon’s training data with each customer’s own proprietary corpus. This approach helps organizations in highly regulated industries get domain-specific models that still benefit from frontier-level capabilities, while avoiding the time and cost of training entirely from scratch.

Because Nova Forge runs on Amazon Bedrock, enterprises can keep customization and inference within a governed, auditable AWS environment, which aligns well with GoML’s focus on compliant GenAI deployments in sectors like healthcare, financial services, and life sciences.

Check out our guide to Nova Forge.

Agentic AI at scale with Amazon Bedrock AgentCore

Agentic AI moved from experimentation to production at re:Invent 2025, with major enhancements to Amazon Bedrock AgentCore. New capabilities such as policy controls, built-in evaluations, and richer memory make it easier to run AI agents safely in real enterprise workflows. To explore concrete patterns like approval-gated agents, multi-agent workflows and tool-calling on AWS, read GoML’s deep dive on Amazon Bedrock AgentCore.

Policy lets teams define natural-language guardrails around what agents can and cannot do, while prebuilt and custom evaluations provide continuous, structured feedback on correctness, safety, and reliability. Enhanced memory support helps agents maintain context across sessions, which is critical for use cases like customer service, underwriting, and clinical support that GoML delivers on AWS.
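The idea of bounding what an agent can and cannot do can be sketched in a few lines of plain Python. This is a generic illustration, not the AgentCore API: the `ToolPolicy` class, the `check_tool_call` function and the tool names are all hypothetical and exist only to show the pattern of an allowlist combined with an approval gate.

```python
from dataclasses import dataclass


# Hypothetical policy shape for illustration only; this is NOT the AgentCore API.
@dataclass(frozen=True)
class ToolPolicy:
    allowed_tools: frozenset      # tools the agent may call at all
    approval_required: frozenset  # tools that need a human sign-off first


def check_tool_call(policy: ToolPolicy, tool_name: str, approved: bool = False) -> str:
    """Return 'allow', 'needs_approval', or 'deny' for a proposed tool call."""
    if tool_name not in policy.allowed_tools:
        return "deny"
    if tool_name in policy.approval_required and not approved:
        return "needs_approval"
    return "allow"


# Example: a customer-service agent may look things up freely,
# but refunds wait for a human approver.
policy = ToolPolicy(
    allowed_tools=frozenset({"lookup_policy", "issue_refund"}),
    approval_required=frozenset({"issue_refund"}),
)
```

In a managed service the rules would be declared in the platform rather than in application code, but the decision the platform makes for each tool call has this same three-way shape.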

Faster model customization with Amazon SageMaker AI and Bedrock RFT

AWS also announced new ways to adapt foundation models faster. Reinforcement Fine-Tuning (RFT) in Amazon Bedrock uses real-world feedback signals to improve model behavior, with AWS citing average accuracy gains of around two-thirds compared to base models in early customer scenarios. This approach reduces the need for large labeled datasets and allows enterprises to improve models iteratively based on how employees and customers actually use them.

In parallel, Amazon SageMaker AI introduced serverless model customization workflows that compress tuning cycles from months to days. Enterprises can describe their customization goals at a higher level, with SageMaker handling orchestration, scaling, and infrastructure, which fits naturally with GoML’s 8‑week pilot and production-readiness motion on AWS.

Trainium3 and HyperPod: efficient training for large models

For organizations training or adapting large models, AWS unveiled Trainium3 UltraServers and improvements to the SageMaker HyperPod training environment. Trainium3-based systems deliver multiple times more compute performance and significantly better energy efficiency than the previous generation, reducing both training time and operational cost for large-scale GenAI workloads.

HyperPod adds fault-tolerance improvements that keep large distributed training jobs running with high cluster efficiency, even when individual nodes fail. This is especially relevant for enterprises and partners like GoML that are pushing into larger custom or domain-adapted models on AWS while needing predictable timelines and budgets.

AI Factories: AWS AI infrastructure in your own data center

To support customers with strict data residency or sovereignty requirements, AWS introduced AI Factories—fully managed AI infrastructure that can be deployed in customer or partner data centers while still exposing familiar AWS services and APIs. This model gives regulated enterprises a way to run GenAI closer to their most sensitive data without losing the operational benefits of managed AWS services.

The AWS gen AI ecosystem for enterprises

AWS brings together everything an enterprise needs for AI, delivering reliable, production-grade building blocks. Here’s how these AWS Gen AI tools fit together and why each deserves its own deep-dive:

- Amazon Bedrock: The simplest way to start using cutting-edge AI models right from the AWS platform. Bedrock gives enterprises flexible access to the best of the AI world, making generative AI adoption seamless. Learn more about why Bedrock is the right choice for enterprise AI.

- Bedrock AgentCore: A comprehensive toolkit for designing, building, and securely managing smart AI agents. It allows businesses to automate processes and enhance customer interactions leveraging AWS AI capabilities.

- Nova 2 Model Family: AWS’s own family of large language and multimodal models, designed for enterprise workloads with a focus on reliability, controllability, and cost efficiency. Nova 2 variants cover everything from fast, lightweight task assistants to advanced multimodal and speech‑to‑speech experiences, giving you a consistent model family across knowledge assistants, document automation, and conversational interfaces. Our Nova 2 guide shows how to choose the right Nova variant for each scenario.

- Amazon SageMaker: End-to-end support for building, training, and deploying custom AI and machine learning models at scale. SageMaker empowers data scientists and ML engineers to manage full model lifecycles, a foundational component of any AWS AI strategy. Learn more about developing machine learning models on SageMaker.

- AWS Lambda: Event-driven compute lets you run code for AI jobs only when needed, thereby maximizing efficiency and minimizing costs. Lambda acts as the connective tissue within AI architectures for your enterprise.

- Strands SDK: The go-to development kit for seamlessly integrating AI models into enterprise applications, accelerating AI adoption without re-architecting core systems.

- Third-party LLMs in Bedrock: Use leading models from Anthropic, Meta, Cohere and others, alongside Amazon’s own Titan models, right inside your AWS AI environment. This flexibility lets you experiment freely and future-proof your investments.

Following AWS re:Invent 2025, Bedrock’s catalog now includes an expanded family of Amazon Nova models and additional leading third‑party options, giving enterprises even more choice in how they balance cost, latency, and capability for each use case.

If you’re looking for a broader overview of the AWS landscape, we’ve broken down the Top 15 AWS machine learning tools. All these tools support scale, security, and choice. AWS AI customers can mix and match what’s best for each scenario.

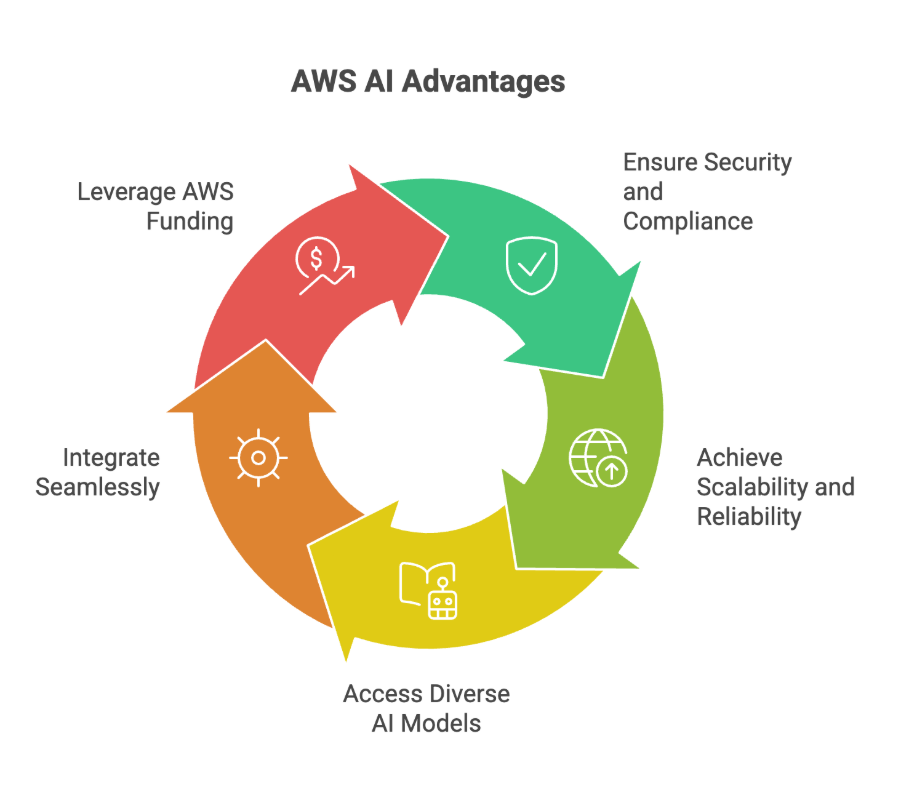

The AWS AI advantage for enterprise AI

AWS’s building blocks offer enterprises more than a toolkit. They deliver the backbone for secure, scalable AI operations.

Security and compliance

Security comes standard on AWS. Data is encrypted at rest and in transit. AWS provides tools like Identity and Access Management (IAM), which lets companies control exactly who accesses what. With support for compliance frameworks and attestations (GDPR, HIPAA, SOC 2, and more), businesses can meet industry standards with less effort. For regulated sectors, AWS already checks many boxes for audits and data residency. For a deeper look into best practices, see our blog on Securing AI Models on AWS: Best Practices and Strategies.
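To make "control exactly who accesses what" concrete, here is a minimal sketch of a least-privilege IAM policy that lets a role invoke only an approved list of Bedrock foundation models. The helper name `bedrock_invoke_policy`, the Region and the model ID are illustrative assumptions; models reached through inference profiles may need additional resource ARNs, so treat this as a starting point rather than a complete policy.

```python
import json


def bedrock_invoke_policy(region: str, model_ids: list[str]) -> dict:
    """Build an IAM policy document that allows invoking only the listed models."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelsOnly",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                # Foundation-model ARNs have an empty account field.
                "Resource": [
                    f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
                    for model_id in model_ids
                ],
            }
        ],
    }


# Example model ID; check which models are enabled in your own account.
policy = bedrock_invoke_policy("us-east-1", ["amazon.nova-lite-v1:0"])
print(json.dumps(policy, indent=2))
```

Generating the document from a reviewed list of model IDs keeps the approved set in one place, which is easier to audit than hand-edited JSON.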

Scalability and reliability

AWS is built for enterprises that can’t afford downtime. Regions and Availability Zones are spread worldwide, so workloads deployed across them can stay up even if a single zone or Region has an outage. This lets you grow from a POC to serving millions without changing your stack. Auto-scaling and managed services mean less time worrying about infrastructure.

AWS announced a slew of updates at AWS re:Invent 2025, ranging from Nova Forge to Trainium3-powered infrastructure and AI Factories. All these deepen this backbone by combining higher performance with stronger governance and deployment flexibility. Capabilities like Nova Forge’s responsible AI toolkit and Bedrock AgentCore’s policy and evaluation features further help security and risk teams encode organizational rules directly into Gen AI systems instead of relying only on ad-hoc prompts.

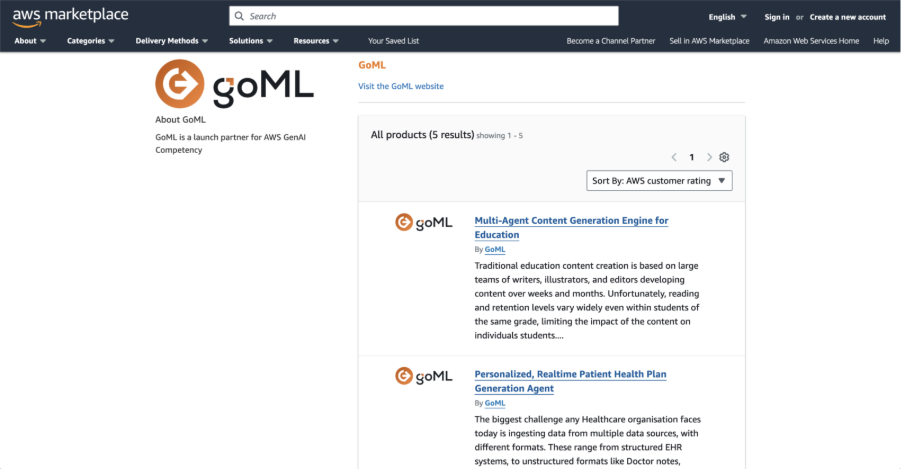

Enjoy a broad choice of models with the AWS Marketplace

AWS’s Bedrock Marketplace brings together leading AI models from top providers, like Anthropic, Cohere, Stability AI, AI21 Labs and more. There’s no need to pick just one model or wait years for new releases. Mix and match best-in-class models to get the right fit for each use case. Enterprises that choose AWS avoid vendor lock-in and can experiment freely as new models emerge. The expanded Nova family and the 2025 Bedrock model additions deepen this neutral marketplace, giving enterprises even more latitude to swap in the best-performing model for each use case without architectural rework.
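One way to see why swapping models need not mean architectural rework: the Bedrock Runtime Converse API takes the same request shape regardless of provider, so only the model ID changes. The sketch below assumes `boto3` is installed and AWS credentials are configured before `ask` is called; the helper names and the two model IDs are examples, and availability depends on your account and Region.

```python
def build_converse_request(model_id: str, prompt: str, max_tokens: int = 512) -> dict:
    """Build keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


def ask(model_id: str, prompt: str) -> str:
    """Send one prompt to a Bedrock model; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the request builder works without it

    client = boto3.client("bedrock-runtime")
    response = client.converse(**build_converse_request(model_id, prompt))
    return response["output"]["message"]["content"][0]["text"]


# Example model IDs (assumptions); the request body is identical for both.
CANDIDATE_MODELS = [
    "amazon.nova-lite-v1:0",
    "anthropic.claude-3-haiku-20240307-v1:0",
]
payloads = [
    build_converse_request(model_id, "Summarize our refund policy.")
    for model_id in CANDIDATE_MODELS
]
```

Because the payloads differ only in `modelId`, an evaluation harness can loop over candidate models and compare answers, latency and cost before committing to one.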

Seamless integration with enterprise tools

AWS connects easily with the tools most companies already use, from databases, analytics platforms and security suites to workflow automation systems. APIs and managed services let engineers jump straight into building AI features instead of wrestling with low-level integration. GoML helps companies activate these integrations quickly, making AI adoption simpler for teams at any stage.

The latest AgentCore updates from re:Invent 2025 let GoML turn these integrations into full agentic workflows - where AI systems not only answer questions but also take bounded actions across enterprise tools under clear policy and governance. If you’re looking for a structured way to design and deploy enterprise-ready agents on AWS, see GoML’s blog on a practical framework for AI agents in the workplace.

Boost your business case with AWS funding

Many GoML accelerator engagements qualify for targeted AWS funding at every project phase, from initial use-case discovery and POC through pilot programs to enterprise-wide rollout. These AWS-backed incentives let you innovate confidently and reduce the financial friction of scaling AI.

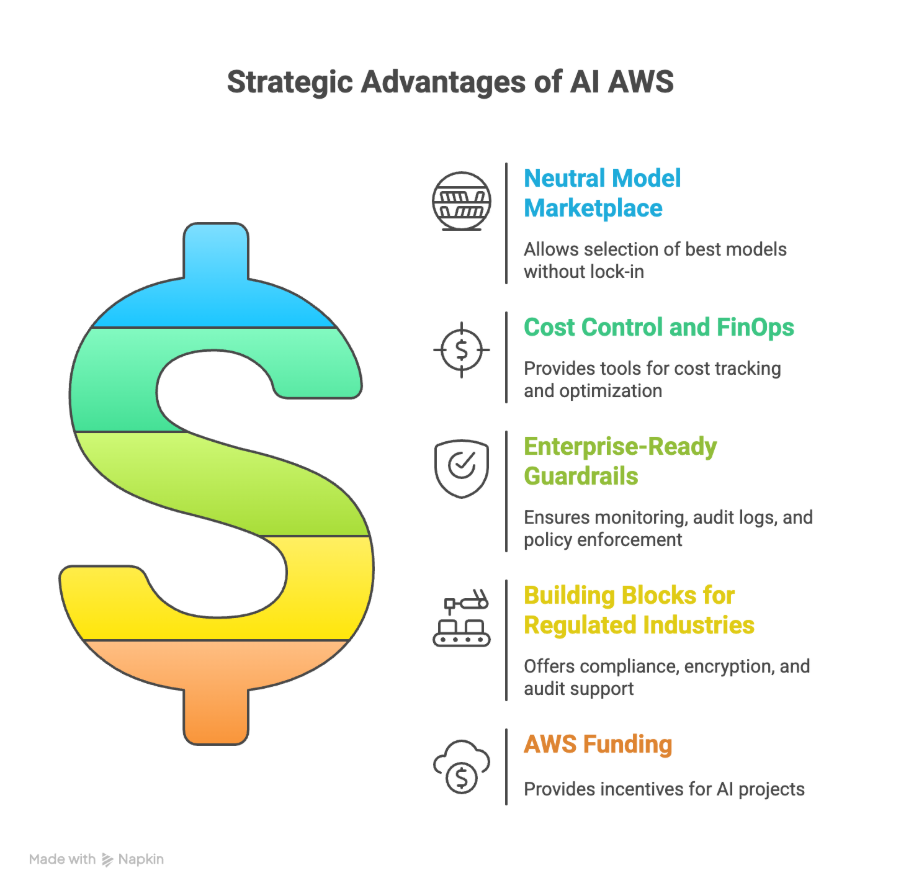

Why enterprises prefer AWS AI

The AWS ecosystem is designed to offer enterprises strategic control over their AI future.

Neutral model marketplace

Unlike some platforms, AWS AI lets you pick the best model for each use case, with no lock-in or forced migrations.

Cost control and FinOps

Finance teams control AWS AI costs using native AWS spend-tracking and optimization tools. Reserved instances, spot pricing, and budgeting automation via official partners like GoML ensure you get more for less.
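Before native spend tracking has any data to show, a back-of-the-envelope estimate helps set budgets. The sketch below assumes on-demand, per-token pricing; the function name and all prices are hypothetical placeholders, so substitute the current rates for the model you actually use.

```python
def monthly_token_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend in USD for a model billed per 1,000 tokens."""
    daily_input_cost = requests_per_day * input_tokens / 1000 * price_in_per_1k
    daily_output_cost = requests_per_day * output_tokens / 1000 * price_out_per_1k
    return (daily_input_cost + daily_output_cost) * days


# Hypothetical workload and prices, for illustration only.
estimate = monthly_token_cost(
    requests_per_day=10_000,
    input_tokens=500,
    output_tokens=200,
    price_in_per_1k=0.0002,
    price_out_per_1k=0.0008,
)
print(f"Estimated monthly cost: ${estimate:,.2f}")
```

Running the same function for two candidate models, or for a shorter prompt, makes the cost impact of a design choice visible before it shows up on a bill.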

Enterprise-ready guardrails and governance

Production-ready AWS AI solutions require guardrails for monitoring, audit logs, and policy enforcement. AWS delivers with services like Config, CloudTrail and GuardDuty, while partners like GoML help overlay company-wide governance and compliance protections. CloudWatch extends monitoring to agentic AI. Our guide to CloudWatch for agentic AI observability dives into how to monitor prompts, tool calls, and agent behavior on AWS like any other production workload.

Building blocks for regulated industries

Industries like finance, healthcare and government trust AWS AI for compliance, data encryption, localization, and audit support. Templates and managed services help legal teams stay ahead as requirements evolve. GoML’s deep domain partnerships further accelerate regulated deployments.

For teams exploring custom frontier models that still run in a governed AWS environment, Nova Forge offers a way to differentiate your models with custom training without the time and cost of training from scratch. Our Nova Forge guide outlines how to design domain-specific models for finance, healthcare, and life sciences.

Accelerate operations with AWS Funding

By partnering with GoML, enterprises are eligible for substantial AWS funding, which can cover discovery workshops, proof-of-concept pilots, and full production deployments. If you’re exploring Gen AI, ask us how AWS-funded incentives can offset your investment and help you move faster from idea to impact.

AWS AI funding opportunities with GoML

Scaling with Generative AI requires a clear, well-funded path to execution. AWS offers a suite of funding programs tailored to every stage of the Gen AI lifecycle, helping enterprises de-risk adoption, accelerate impact, and optimize costs. As an AWS Gen AI Competency Partner, GoML’s proven frameworks maximize your access to these incentives, turning complex initiatives into production results faster.

Key AWS funding programs

1. Assessment and discovery

Kickstart your Gen AI journey with up to $10,000 in AWS funding covering discovery workshops and roadmap design. This enables structured use-case alignment and executive buy-in with minimal upfront cost.

2. Proof of Concept (POC)

Secure up to $250,000 in funding (up to 40% of Year-1 ARR) for 4-week POC engagements. This phase is designed for rapid demonstration of value, model evaluations, and hands-on validation—eliminating internal inertia and enabling faster go/no-go decisions.

3. Pilot deployment

When it’s time to scale, AWS supports larger pilots with incremental funding (up to $250,000), ideal for iterative testing and ensuring smooth transitions from POC to production. GoML’s 8-week pilots cover LLMOps best practices for enterprise readiness.

4. Production rollout

Move to full deployment with production-ready funding (also up to $250,000), supporting robust, scalable, and compliant Gen AI applications. This covers architecture hardening, integration, and launch support ensuring enterprise-wide adoption.
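As a quick worked example of the POC tier above, the two limits ("up to $250,000" and "up to 40% of Year-1 ARR") can be combined into a single ceiling. This sketch assumes they apply as "whichever is lower", which is our reading of the figures and not confirmed program terms; the function name is illustrative, and actual eligibility is decided by AWS.

```python
POC_FUNDING_CAP_USD = 250_000   # "up to $250,000"
POC_ARR_SHARE_PERCENT = 40      # "up to 40% of Year-1 ARR"


def poc_funding_ceiling(year1_arr_usd: int) -> int:
    """Return the POC funding ceiling, assuming the lower of the two limits applies."""
    arr_based_limit = year1_arr_usd * POC_ARR_SHARE_PERCENT // 100
    return min(POC_FUNDING_CAP_USD, arr_based_limit)


# A workload with $400,000 projected Year-1 ARR is limited by the 40% rule;
# one with $1,000,000 is limited by the absolute cap.
small_deal = poc_funding_ceiling(400_000)
large_deal = poc_funding_ceiling(1_000_000)
```

Under this assumption the absolute cap starts to bind once projected Year-1 ARR reaches $625,000, since 40% of that figure is $250,000.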

Why partner with GoML for AWS Funding?

- Strategic expertise: GoML’s AI Matic framework and “LLM Boilerplates” make the most of AWS’s tiered funding, aligning each activity with the highest ROI and rapid application.

- End-to-end support: From initial discovery to production, GoML navigates every aspect of the process, ensuring proof, pilots, and launches all maximize eligible AWS funds.

- Future-proof approach: Our enterprise-grade integration, compliance focus, and deep AWS partnership mean you can scale Gen AI projects without vendor lock-in or budget surprises.

For an in-depth breakdown of available programs, eligible use cases, funding workflow, and actionable strategies for both startups and enterprises, download our complete AWS Funding Programs for Gen AI eBook.

Choosing the right AWS AI and ML tools for your use case

Different business challenges require specialized approaches. AWS’s modular platform lets you select purpose-built AWS AI tools to fit every scenario, whether it’s SageMaker for custom training, Bedrock for generative models, or Lambda for event-driven workflows. Evaluating needs and matching the right tool is critical for AWS AI success.

Benefits of AWS AI and Machine Learning tools for enterprises

AWS’s robust suite of machine learning tools enables rapid AI innovation, from Amazon SageMaker’s model lifecycle management to Amazon Bedrock’s third-party model access. This wide array of tools forms the basis for flexible, scalable and secure AWS AI deployments across almost every use case.

Our blog on AWS AI offerings for enterprises highlights some of the latest AWS AI tools that institutions are adopting.

AWS AI in action across industries

Finance

Enterprises rely on AWS AI to power fraud detection, transaction monitoring, and intelligent underwriting, all while meeting strict audit and performance standards. For example, GoML built an AI-powered transaction monitoring tool for Miden on AWS to detect anomalies in real time and reduce fraud risk. Similarly, GoML developed a conversational AI chatbot for Ledgebrook underwriting, allowing underwriters to query policies and documents instantly, cutting retrieval time by 70% and automating classification with 90% accuracy.

With the new Nova and Bedrock AgentCore capabilities, similar underwriting and fraud workflows can now be powered by agentic systems that reason over long histories and execute end-to-end tasks, while still operating inside a compliant AWS environment.

Healthcare

AI is enabling smarter patient care in a significant way. GoML deployed a generative AI copilot for Max Healthcare, built on Amazon Bedrock, that lets clinicians pull insights from longitudinal patient data through natural language queries, bringing real-time decision support into care delivery while meeting HIPAA-grade compliance.

2026 innovations like Nova 2 Omni and on-prem AI Factories further strengthen this pattern, enabling GoML to deliver clinical copilots that combine rich longitudinal context with strict data residency and compliance controls.

Life Sciences and Pharma

Strict compliance and audit demands make pharma a natural fit for AI. GoML’s work with SagaxTeam demonstrates this. GoML built a pharma AI compliance agent for automated audits, which delivered 80% faster audit speeds, 70% automation in prep and review, and 50% fewer documentation errors, all while running securely on AWS.

With Nova Forge and Bedrock Reinforcement Fine-Tuning introduced at re:Invent 2025, GoML can now shape pharma-grade frontier models around specific regulatory frameworks and document workflows, improving audit accuracy while reducing manual review effort even further. GoML’s Nova 2 Omni deep dive explains how multimodal Nova models can reason over text, documents and visual data in a single clinical or compliance workflow on AWS.

Future-proof your enterprise AI strategy with AWS AI

With rapid change in AI models, regulations and best practices, AWS keeps organizations ready for tomorrow. Its evolving model marketplace, managed services, and strict compliance support ensure you’ll never be left behind. Adopting modern MLOps, prompt engineering, and guardrails is frictionless within an AWS architecture.

GoML works with AWS to speed up deployments and keep your organization production-ready as the gen AI market evolves.

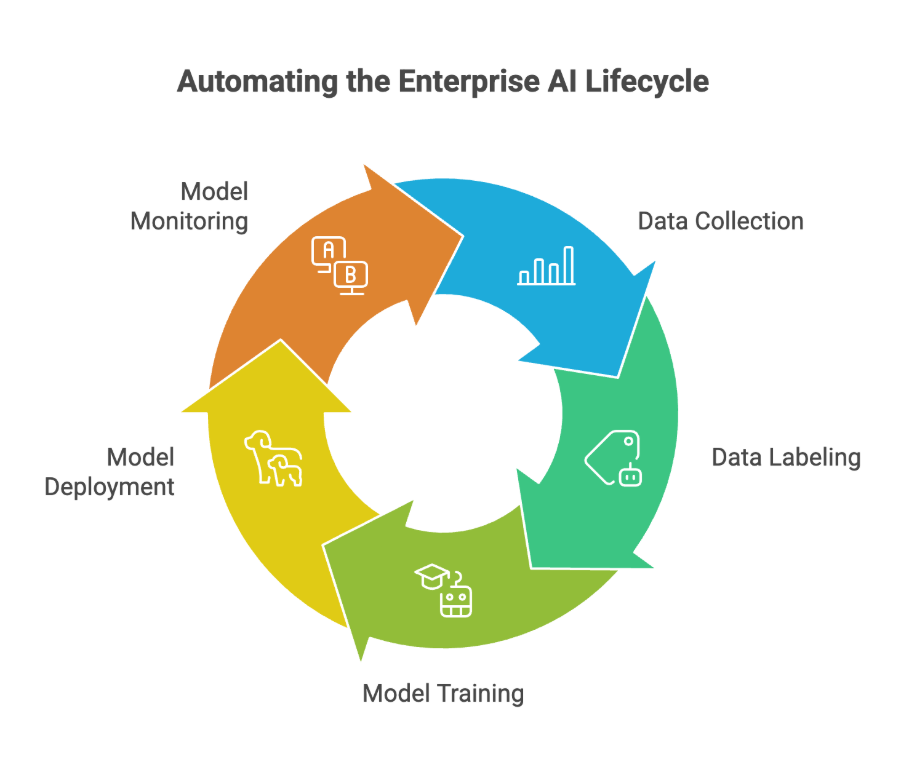

Automating the enterprise AI lifecycle with AWS AI

From data collection and labelling to model training, deployment, and monitoring, AWS supports automation at every step of the AI lifecycle. Services like SageMaker Pipelines, AWS Lambda and CloudWatch empower organizations to streamline processes, reduce manual errors and enable real-time updates, ensuring enterprise-grade AWS AI solutions stay agile as business needs shift.
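As an illustration of the event-driven piece of that lifecycle, here is a minimal sketch of a Lambda handler that reacts to new files landing in S3 and decides which ones should kick off downstream work. The `incoming/` prefix and the response shape are hypothetical conventions chosen for this example; a real handler would go on to start a pipeline run (for instance through the SageMaker API) where the comment indicates.

```python
import urllib.parse


def extract_new_objects(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 object-created notification event."""
    objects = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in notifications (spaces arrive as '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context):
    """Lambda entry point: pick the new files that should trigger processing."""
    # Hypothetical convention: only files under 'incoming/' start a run.
    to_process = [
        {"bucket": bucket, "key": key}
        for bucket, key in extract_new_objects(event)
        if key.startswith("incoming/")
    ]
    # A real handler would start the training or inference pipeline here.
    return {"triggered": len(to_process), "objects": to_process}


# Trimmed-down sample event for local testing.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "demo-bucket"},
                "object": {"key": "incoming/claims+2026.csv"},
            },
        }
    ]
}
result = handler(sample_event, None)
```

Keeping the parsing in a pure function like `extract_new_objects` means the logic can be unit-tested locally without deploying to Lambda or touching S3.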

The future of enterprise AI on AWS

Explore how AWS AI’s growing partner ecosystem, ongoing model innovation, and expanding governance capabilities make AWS the safest bet for businesses planning for an AI-powered future.

How to get started with AWS AI

There are several ways to launch your AWS AI journey:

- Use existing models: Explore pre-built generative models in Bedrock, delivering quick wins for common business needs.

- Customize AWS AI models: Fine-tune models using your own data with SageMaker or Bedrock.

- Build your own: For unique requirements, develop new models using the full AWS AI toolkit.

- Connect to other tools: Use AWS Lambda or Strands SDK to develop full-scale apps, end-to-end automations and enterprise workflows.

For organizations accelerating this journey, AWS Gen AI Competency Partners like GoML offer expert guidance for AI design, deployment, and scaling.

Need a springboard? Check out the AWS Marketplace for pre-built AWS AI models, tools, and turnkey solutions from leading partners. This can reduce deployment cycles and let your teams focus on value creation.

AWS delivers everything enterprises need for successful AWS AI adoption: secure, global scale, flexible and neutral model choices, robust governance, and seamless integration.

With a vibrant model marketplace and enterprise-grade compliance, AWS stands out as the best platform for today and tomorrow. Furthermore, leading gen AI development companies like GoML help enterprises unlock AWS’s full potential, making AWS AI projects production-ready, cost-controlled and future-proof from day one.

FAQ: Enterprise AI on AWS

Q1: Is AWS more secure than other cloud providers for enterprise AI?

AWS holds top certifications and offers enterprise-grade encryption, access control, and continuous monitoring. For most regulated enterprises, AWS is as secure as or more secure than competitors.

Q2: Can I use different AI models on AWS, or am I stuck with just one?

You aren’t locked in. AWS gives you access to a wide marketplace of models from leading providers, plus its own services.

Q3: How does AWS help with AI costs?

You get precise tools to track and control spending by project, model, or user. Reserved pricing, spot instances, and usage dashboards make cost management easier.

Q4: Can I deploy fine-tuned open-source models like Llama 2 on AWS?

Absolutely. AWS provides scalable infrastructure and managed services that make it seamless to deploy fine-tuned models. You can explore a detailed walkthrough in our guide on deploying Llama 2 on AWS.

Q5: What makes AWS different from Azure or GCP for enterprise AI?

AWS stands out for its neutral model marketplace, enterprise-grade compliance, deep integration capabilities, and the largest catalog of third-party models to suit any use case.