Large Language Models (LLMs) have moved beyond the lab; they’re now powering real-world change.

In 2025, every kind of business, from fast-moving startups to global enterprises, is trying to turn AI’s potential into real, measurable outcomes.

This guide cuts through the noise. It breaks down what LLMs are, what they can do today, which models are leading the way, and how practical LLM use cases are transforming industries, job roles, and workflows.

What can LLMs do well?

As 2025 comes to an end, large language models have evolved from promising experiments into practical business enablers. No longer confined to chatbots or content tools, they now sit at the core of enterprise operations, powering automation, analysis, and customer engagement through real-world LLM use cases.

Here’s a look at the capabilities driving successful LLM use cases and defining the next generation of intelligent systems:

- Natural language understanding: Modern LLMs interpret complex instructions, tone, and context, making AI interactions more intuitive and human-like.

- Content creation and enhancement: From marketing assets to policy documents, they generate clear, audience-ready content while maintaining brand and factual consistency.

- Summarization and knowledge extraction: LLMs can analyze large volumes of data and distill key takeaways, turning information overload into actionable insight.

- Conversational intelligence: They power responsive chatbots and digital assistants that maintain natural, context-aware conversations across industries.

- Multilingual translation: With near-human fluency across languages, LLMs remove barriers in global communication and localization efforts.

- Information retrieval and synthesis: Beyond searching, LLMs compile, compare, and explain data, offering clear summaries for decision-makers.

- Reasoning and scenario planning: Advanced models can simulate outcomes, support strategy discussions, and assist in structured problem-solving.

- Multimodal interaction: In 2025, models made a major leap and can now understand and generate text, images, and audio, expanding LLM use cases beyond written content.

- Personalization at scale: They tailor outputs based on user profiles, tone preferences, and objectives, enabling AI that feels genuinely customized.

- Long-context awareness: With expanded memory and context windows, LLMs can track information across extended conversations and multi-document inputs.

Leading LLMs in 2025

Below are some of the standout models in 2025 (publicly known) and what makes them interesting for users.

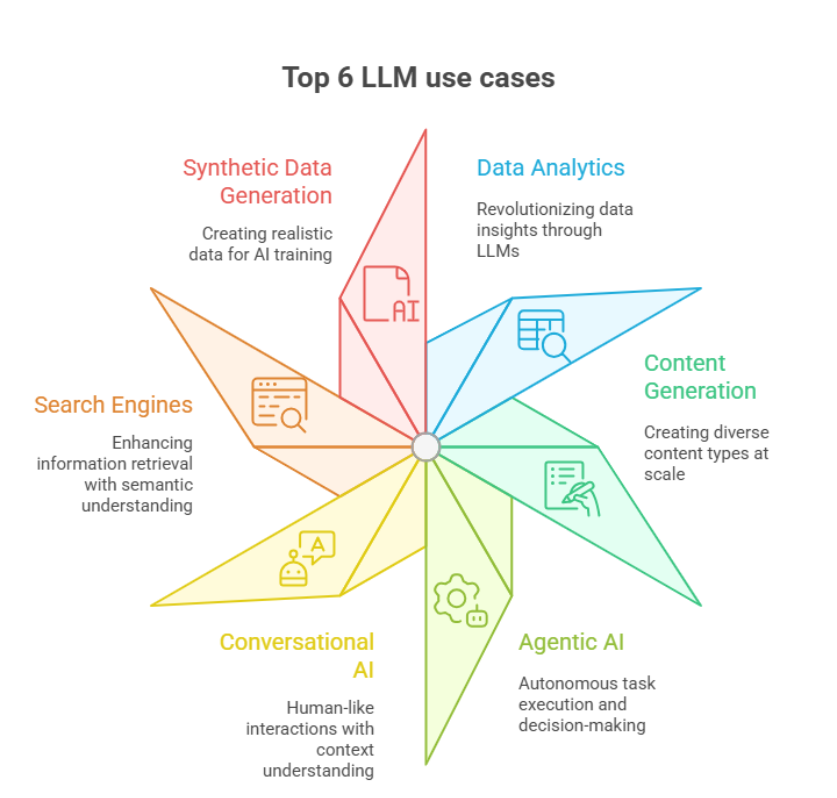

Top 6 LLM Use Cases

Large Language Models are transforming enterprise operations across industries, with 67% of organizations worldwide already adopting these technologies to enhance their operations and achieve competitive advantages.

The following six use cases represent the most impactful applications of LLMs in modern business environments.

LLM use case 1: Data Analytics

Large Language Models have revolutionized how organizations analyze and derive insights from their data. Unlike traditional analytics tools, LLMs can process massive datasets from hundreds of sources at once: vendor data across supply chains, patient records spanning healthcare systems, or financial transactions across enterprise databases.

Key Capabilities:

- Multi-format data processing: Work seamlessly with structured data (SQL databases, spreadsheets), unstructured data (clinical notes, emails, reports), and semi-structured data (JSON logs, XML files), all within a single workflow

- Cross-database querying: Query across different database types, relational (MySQL, PostgreSQL), NoSQL (MongoDB, Cassandra), data warehouses (Snowflake, Redshift), or graph databases, without complex ETL pipelines

- Natural language to SQL: Translate human language to SQL. Business users ask "What were our top-selling products in Q3?" and the LLM generates the query and presents the results, with no SQL expertise required

- Domain-specific intelligence: Understand domain-specific data, medical terminology in healthcare, financial instruments in banking, materials specifications in manufacturing, grasping context and business rules that make insights actionable
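
The natural-language-to-SQL capability in the list above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the pipeline used in any project described in this guide: `fake_llm_to_sql` is a hypothetical stand-in for a real model call, the `sales` table and its columns are invented for the example, and the read-only `SELECT` guard is just one assumed safety check among several a production system would need.

```python
import sqlite3

# Hypothetical schema, invented only for this illustration.
SCHEMA = "CREATE TABLE sales (product TEXT, quarter TEXT, units INTEGER)"


def fake_llm_to_sql(question: str) -> str:
    """Stand-in for an LLM call. A real system would send the question
    and the schema to a model and receive SQL text back."""
    return (
        "SELECT product, SUM(units) AS total_units FROM sales "
        "WHERE quarter = 'Q3' GROUP BY product "
        "ORDER BY total_units DESC LIMIT 2"
    )


def is_safe_select(sql: str) -> bool:
    """Accept only a single read-only SELECT statement."""
    stripped = sql.strip().rstrip(";")
    return stripped.lower().startswith("select") and ";" not in stripped


def answer(conn: sqlite3.Connection, question: str) -> list[tuple]:
    """Generate SQL for the question, check it, then run it."""
    sql = fake_llm_to_sql(question)
    if not is_safe_select(sql):
        raise ValueError("generated SQL rejected by guard")
    return conn.execute(sql).fetchall()


def demo_connection() -> sqlite3.Connection:
    """Build an in-memory database with a few made-up rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [("widget", "Q3", 120), ("gadget", "Q3", 300),
         ("widget", "Q2", 500), ("gizmo", "Q3", 80), ("gadget", "Q3", 50)],
    )
    return conn
```

Calling `answer(demo_connection(), "What were our top-selling products in Q3?")` runs the generated query against the sample rows. Checking generated SQL before execution matters because the model's output is untrusted text.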

Real-world applications

1. Manufacturing and supply chain optimization: Steel manufacturers face challenges in sourcing and raw materials planning due to massive data across hundreds of vendors and live vessel reports. Our NLP-powered supply chain analytics for ArcelorMittal Nippon Steel enabled real-time insights from 230+ vendors globally, reducing planning effort by 65% and improving accuracy by 60%, resulting in 80% faster decision-making in steel manufacturing operations.

2. Healthcare data intelligence: Healthcare providers struggle to analyze patient data from labs, diagnostics, and clinical notes, causing delays in medical decision-making. Our AI-powered patient data analytics for Atria Healthcare automates this analysis, reducing time by 55% and enabling real-time risk detection by synthesizing fragmented patient information into actionable clinical insights.

3. Financial planning and analysis: Global enterprises often struggle to make financial planning faster and more data-driven across distributed operations. Our financial analysis AI copilot built for Bosch automates financial data interpretation, scenario modeling, and insight generation through natural language queries. By connecting to multiple data sources and transforming raw financial data into actionable intelligence, Bosch achieved a 60% improvement in decision-making efficiency, empowering executives to make informed, timely, and confident financial decisions.

LLM use case 2: Content Generation

LLMs have transformed content creation by generating high-quality, contextually relevant text at scale. Unlike traditional content tools, LLMs can produce diverse content types simultaneously, clinical reports, marketing materials, technical documentation, and educational content, each following specific templates and brand guidelines.

Key Capabilities:

- Multi-format content generation: Generate content from structured data (patient records, product catalogs), unstructured inputs (brand guidelines, reference materials), and semi-structured sources (form data, metadata), synthesizing information into cohesive narratives

- Cross-platform content creation: Work across multiple content repositories, document management systems, CMS platforms, knowledge bases, and cloud storage, pulling information to create unified, consistent content

- Natural language content requests: Accept natural language instructions. Users say "Create a patient discharge summary" or "Generate product descriptions for our fall collection," and LLMs produce publication-ready content

- Domain-specific content expertise: Understand domain-specific requirements, medical compliance in healthcare documentation, brand voice in marketing, technical accuracy in industrial content, age-appropriate language in educational materials

Real-world applications

1. Healthcare documentation: Healthcare providers struggle to generate timely patient reports and clinical summaries. Our Gen AI-powered content generation for Atria Healthcare automates creation from lab tests, clinical notes, and diagnostics, reducing analysis time by 55% and enabling faster decision-making by generating structured, actionable content from unstructured healthcare data, with seamless integration into tools like Google Docs and Slack.

2. Personalized marketing content: Marketing teams struggle to create localized and personalized campaigns at scale. Our AI-driven marketing content generation for Solarplexus automates the creation of hyper-personalized images and text, boosting campaign ROI by 60% while cutting creative operations effort by 32%. The solution enables targeted, high-performing campaigns that achieve a 10× improvement in conversions through data-driven personalization and automated content delivery.

3. Educational content development: Educational content creation can be time-intensive and resource-heavy. Our AI-powered interactive storytelling for Ojjee streamlines interactive book creation, reducing production time by 30% while enhancing storytelling, visual creativity, engagement, and learning retention through rich narratives and multimedia integration.

LLM use case 3: Agentic AI

Agentic AI represents LLMs that reason, plan, and execute complex tasks autonomously. Unlike simple chatbots, AI agents make independent decisions, orchestrate multi-step workflows, and adapt to changing contexts in real-time.

Key Capabilities:

- Multi-format data processing: Process structured operational data (work orders, quality metrics), unstructured information (safety standards, technical manuals), and semi-structured inputs (API responses, sensor data), making informed decisions across information types

- Cross-system integration: Integrate with multiple system types, ERP platforms, IoT networks, document repositories, and workflow management systems, coordinating actions without manual intervention

- Natural language agent instructions: Operate via natural language. Users say "Process incoming work orders and flag safety issues" or "Monitor production quality and alert on anomalies," and agents execute autonomously

- Domain-specific decision-making: Understand domain-specific contexts, clinical protocols in healthcare, safety regulations in manufacturing, compliance requirements in utilities, quality standards in industrial operations
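
The plan-then-execute pattern behind these capabilities can be sketched as a small loop that maps an instruction to a sequence of tools. Everything here is an assumption made for illustration: `fake_planner` stands in for an LLM planner, and the tool names, work-order fields, and the high-voltage safety rule are hypothetical rather than taken from any deployment described in this guide.

```python
from typing import Callable


def check_safety(order: dict) -> dict:
    """Hypothetical rule: high-voltage work needs a lockout/tagout record."""
    risky = "high voltage" in order["description"].lower()
    flagged = risky and not order.get("lockout_tagout", False)
    return {**order, "safety_flag": flagged}


def route(order: dict) -> dict:
    """Send flagged orders to review and everything else to dispatch."""
    queue = "safety_review" if order.get("safety_flag") else "dispatch"
    return {**order, "queue": queue}


# Registry of tools the agent is allowed to call.
TOOLS: dict[str, Callable[[dict], dict]] = {
    "check_safety": check_safety,
    "route": route,
}


def fake_planner(instruction: str) -> list[str]:
    """Stand-in for an LLM planner that turns an instruction into tool names."""
    return ["check_safety", "route"]


def run_agent(instruction: str, order: dict) -> dict:
    """Execute the planned steps in order, refusing unknown tools."""
    for step in fake_planner(instruction):
        if step not in TOOLS:
            raise KeyError(f"unknown tool: {step}")
        order = TOOLS[step](order)
    return order
```

Restricting the agent to a fixed tool registry is one way to keep autonomous execution auditable: the model chooses the steps, but only vetted functions can run.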

Real-world applications

1. Real estate listing automation: Real estate platforms struggle with manual property listing validation, image quality issues, and slow review processes. Our AI-driven agentic system for property listing automation at Property Finder automates property image validation across 44 parameters, including quality improvements, watermark removal, and metadata extraction, cutting review time by 75%, eliminating 85% of low-quality images, making listings 99% faster, and increasing revenue by 4% with reduced rejection rates through autonomous multi-modal content generation and validation.

2. Industrial quality control: Manufacturers often struggle to pull critical specs from unstructured PDFs, slowing engineering workflows and introducing errors. Our AI agents for automated PDF data extraction at Podium Automation extract component specifications (amperage, voltage, and connection types) via ML + LLM pipelines, converting them into structured JSON with 85%+ accuracy and 80% faster throughput. The solution slashes manual effort, reduces errors, and accelerates design cycles.

3. Field operations management: Power companies often struggle under heavy manual load when processing field operations and maintenance work orders. Our Gen AI super agents for work order automation at Dairyland Power transform these workflows by analyzing, enriching, and routing tasks, cross-referencing internal systems and safety rules. The result: a 70% reduction in manual processing time, full compliance enforcement, and faster, safer field operations.
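
For extraction workflows like the PDF example above, the step after the model returns JSON is usually validation. The sketch below shows one way that check might look. The field names (`amperage_a`, `voltage_v`, `connection_type`) and the sample output string are assumptions for illustration, not the schema used in the project described.

```python
import json
from dataclasses import dataclass

REQUIRED = {"amperage_a", "voltage_v", "connection_type"}


@dataclass
class ComponentSpec:
    amperage_a: float
    voltage_v: float
    connection_type: str


def parse_spec(raw: str) -> ComponentSpec:
    """Parse model output and reject records with missing or invalid fields."""
    data = json.loads(raw)
    missing = REQUIRED - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    spec = ComponentSpec(
        amperage_a=float(data["amperage_a"]),
        voltage_v=float(data["voltage_v"]),
        connection_type=str(data["connection_type"]).strip(),
    )
    if spec.amperage_a <= 0 or spec.voltage_v <= 0:
        raise ValueError("amperage and voltage must be positive")
    return spec


# Hand-written stand-in for what an extraction model might return.
RAW_MODEL_OUTPUT = (
    '{"amperage_a": "16", "voltage_v": 240, "connection_type": " screw terminal "}'
)
```

Coercing types and rejecting incomplete records at this boundary keeps malformed model output from reaching downstream engineering systems.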

LLM use case 4: Conversational AI

Conversational AI powered by LLMs creates human-like interactions that understand context, intent, and emotion. Unlike scripted chatbots, these systems offer adaptive, intelligent conversations that feel natural and productive.

Key Capabilities:

- Multi-format data understanding: Work with structured data (employee records, property listings, ticket systems), unstructured content (support documentation, psychometric assessments, company policies), and semi-structured information (chat histories, interaction logs), providing contextually relevant responses

- Cross-platform connectivity: Connect to various platforms, CRM systems, HR databases, cloud infrastructure, scheduling tools, delivering unified conversational experiences

- Natural conversation interface: Enable natural dialogue; users say "Help me resolve this AWS error" or "Find properties matching my criteria," and the AI understands intent, retrieves information, and responds appropriately

- Domain-specific conversational expertise: Understand domain expertise, HR coaching dynamics, real estate requirements, technical troubleshooting steps, customer service protocols
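
Context-aware conversation depends on which earlier turns are sent back to the model with each request. A minimal sketch of one common approach is shown below: keep the system message and as many of the most recent turns as fit a budget. The word-count token estimate is a deliberate simplification (real systems would use the model's own tokenizer), and the message format is an assumption.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str
    content: str


def approx_tokens(text: str) -> int:
    """Crude proxy for token count: whitespace-separated words."""
    return len(text.split())


def trim_history(messages: list[Message], budget: int) -> list[Message]:
    """Keep system messages, then add the newest turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(approx_tokens(m["content"]) for m in system)
    kept: list[Message] = []
    # Walk backwards from the newest turn and stop at the first that does not fit.
    for msg in reversed(turns):
        cost = approx_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return system + list(reversed(kept))
```

Dropping the oldest turns first is the simplest policy. Summarizing older turns instead of discarding them is a common alternative when earlier context still matters.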

Real-world applications

1. Personalized HR coaching: HR platforms struggle with personalized coaching content for employees. Our AI-powered personal coaching system for SurePeople delivers real-time, customized feedback and mental wellness guidance, reducing content creation time by 55% and boosting engagement by 60% through psychometric data-driven, adaptive interactions that maximize productivity and personal growth.

2. Real estate sales automation: Real estate operations often struggle with slow response times and low lead conversion due to manual inquiry handling. Our AI-driven leasing assistant at Unity AI automates property inquiries and scheduling, reducing response time by 42% and boosting lead conversion by 5× through context-rich dialogue and automated high-touch sales interactions.

3. Transaction monitoring automation: Financial services often face delays and inefficiencies in manual transaction monitoring, increasing the risk of fraud. Our Gen AI-powered transaction monitoring tool at Miden automates real-time anomaly detection across 50+ transaction types, reducing fraud detection time by 82%, improving transaction processing scalability by 75%, and cutting manual monitoring effort by 67%. This solution enables proactive fraud detection and enhances operational efficiency in banking transactions.

LLM use case 5: Search Engines

LLM-powered search engines transform information retrieval by understanding user intent, context, and semantic meaning rather than just matching keywords. Unlike traditional search, these systems provide conversational search experiences and synthesize information from multiple sources.

Key Capabilities:

- Multi-format indexing: Index structured data (product catalogs, financial transactions), unstructured content (documents, technical manuals, images), and semi-structured information (metadata, tags, annotations), delivering comprehensive results

- Cross-system search: Query across diverse systems, eCommerce databases, document repositories, knowledge graphs, financial databases, providing unified search experiences

- Natural language search queries: Enable natural language search. Users say "Show my recurring expenses over $100" or "Find properties with outdoor space near downtown," and search engines interpret intent, retrieve relevant results, and synthesize answers

- Domain-specific search intelligence: Understand domain context, product attributes in retail, financial patterns in banking, property characteristics in real estate, technical specifications in enterprise search
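
The ranking step behind this kind of search can be sketched as cosine similarity between a query vector and document vectors. In the sketch below, `toy_embed` is a placeholder that uses bag-of-words counts, so it only matches shared words. A real system would swap in an embedding model that captures meaning, which is an assumption outside this example. The sample documents are invented.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Placeholder for an embedding model: lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, docs: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents ranked by similarity to the query."""
    q = toy_embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, toy_embed(d)), reverse=True)
    return ranked[:top_k]


# Invented sample documents for the illustration.
DOCS = [
    "apartment with balcony near downtown",
    "monthly recurring expenses report",
    "suburban house with large garden",
]
```

With real embeddings the same `search` function would also match "outdoor space" to "balcony" or "garden", which word overlap alone cannot do.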

Real-world applications

1. eCommerce product discovery: eCommerce platforms often struggle with generic search results and low personalization, leading to poor user engagement. Our AI-driven search and recommendation engine for Techatomic leverages semantic understanding and behavioral cues to deliver highly relevant, persona-based results that prioritize user intent. This approach has led to a 40% increase in session-to-click conversions, a 25% reduction in search abandonment, and a 20% faster product discovery time, all while ensuring ethical, unbiased, and scalable AI-powered search experiences.

2. Enterprise knowledge management: Organizations often struggle to manage vast document repositories and retrieve contextually relevant insights. Our LLM-based search and knowledge graph solution for Tungsten Automation enables users to query unstructured data from complex documentation formats (PDFs, HTML, DITA) using natural language. This approach improves information retrieval accuracy by 85%, reduces search time by 70%, and boosts certification exam preparedness scores by 60%, all through semantic understanding and context-aware responses.

3. Financial data navigation: Users managing multiple accounts and transactions need simplified financial data navigation. Our AI-enhanced financial search tool for GetAsset leverages Claude 3.5 on AWS Bedrock to classify spending patterns and interpret conversational queries like "show my top 5 recurring expenses." This solution improves classification accuracy by 20%, reduces API costs by 30%, and bridges natural language with structured financial data for intuitive exploration and decision support.

LLM use case 6: Synthetic Data Generation

LLMs generate realistic synthetic data that mirrors real-world patterns without exposing sensitive information. Unlike traditional data masking, synthetic data creation enables AI model training, system testing, and application development while maintaining privacy and compliance.

Key Capabilities:

- Multi-format synthetic generation: Create structured synthetic data (database records, transaction logs), unstructured content (clinical notes, customer conversations), and semi-structured formats (IoT device responses, API payloads), maintaining statistical properties of real data

- Cross-source simulation: Simulate data across various sources, patient management systems, IoT networks, customer service platforms, workforce analytics, without accessing production systems

- Natural language data specifications: Request synthetic data through natural language. Organizations say "Generate 10,000 patient records with cardiovascular conditions" or "Create IoT device failure scenarios," and LLMs produce realistic datasets

- Domain-specific data fidelity: Preserve domain characteristics, medical coherence in healthcare records, behavioral patterns in customer data, device physics in IoT simulations, regulatory compliance in financial transactions
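
A request like the one in the list above typically becomes a prompt, and the model's output is checked before anyone uses it. The sketch below illustrates that shape under stated assumptions: `fake_llm_generate` is a hypothetical stand-in returning hand-written records, and the field names and plausibility ranges are invented for the example rather than clinical guidance.

```python
import json

# Hypothetical record schema for the illustration.
REQUIRED_FIELDS = {"age", "condition", "systolic_bp"}


def build_prompt(count: int, condition: str) -> str:
    """Turn a structured request into an instruction for the model."""
    fields = ", ".join(sorted(REQUIRED_FIELDS))
    return (
        f"Generate {count} synthetic patient records with {condition}. "
        f"Return one JSON object per line with fields: {fields}. "
        "Do not include names or real identifiers."
    )


def fake_llm_generate(prompt: str) -> str:
    """Stand-in for a model call; returns hand-written JSON lines."""
    return "\n".join([
        '{"age": 61, "condition": "hypertension", "systolic_bp": 152}',
        '{"age": 47, "condition": "hypertension", "systolic_bp": 900}',
        '{"age": 58, "condition": "hypertension"}',
    ])


def valid_records(raw: str) -> list[dict]:
    """Keep only records with all fields and values in assumed plausible ranges."""
    kept = []
    for line in raw.splitlines():
        rec = json.loads(line)
        if not REQUIRED_FIELDS.issubset(rec):
            continue
        if 0 <= rec["age"] <= 120 and 60 <= rec["systolic_bp"] <= 260:
            kept.append(rec)
    return kept
```

Here the second sample record is dropped for an implausible blood pressure and the third for a missing field. Filtering like this is one way to protect the domain fidelity that makes synthetic data useful.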

Real-world applications

1. IoT testing automation: IoT test automation companies face challenges in validating connected devices at scale with dependency on real hardware. Our LLM-powered synthetic data generation for IoT simulation at Doppelio creates realistic, context-rich synthetic datasets that mimic device behavior and diverse IoT environments, including failure scenarios and device responses. This approach enables faster, safer, and more cost-effective testing cycles without dependency on live data.

2. Healthcare AI development: Healthcare organizations often struggle with manual clinical documentation processes that are time-consuming and prone to errors. Our synthetic clinical note generation for Mariana.ai leverages LLMs to produce de-identified, medically coherent patient data for training AI models. This approach accelerates healthcare AI innovation while ensuring HIPAA compliance and preserving patient privacy.

3. Customer service training: IT operations teams often face challenges in managing complex environments and responding to support tickets efficiently. Our Gen AI-enhanced operations automation system for GuidePad simplifies complex IT and data operations by automating platform workflows and enhancing usability. This solution reduces support ticket volumes by 40%, improves developer productivity by 35%, and enhances operational efficiency by 25%, all through intelligent, Gen AI-driven enhancements deployed on AWS Cloud Infrastructure.

LLM use cases in different industries

Large Language Models (LLMs) are revolutionizing industries by turning vast amounts of unstructured data into actionable insights. From healthcare to finance, manufacturing, and beyond, LLMs enable smarter decision-making, automation of complex workflows, and improved operational efficiency.

LLM use cases in healthcare

Large language models are transforming healthcare by enabling faster, more accurate diagnosis, predictive health monitoring, and streamlined administrative workflows. AI-driven insights help clinicians focus on patient care rather than data management.

1. Clinical decision support systems

Physicians often juggle fragmented patient records, lab results, and diagnostic reports across multiple systems, losing precious time in critical decisions. Our AI-powered clinical decision support system helped Atria Co-Pilot unify these sources into one contextual dashboard, surfacing risk factors, treatment history, and key correlations instantly. This intelligent assistant enables 99% faster decision-making, reduces diagnostic errors, and lets clinicians spend more time with patients rather than chasing data.

2. Longitudinal patient data copilot

Clinicians at Max Healthcare were hampered by siloed systems and lacked tools to query patient histories across time, making trend detection and chronic-care planning slow and manual. Our generative AI copilot for longitudinal patient data lets Max Healthcare’s medical teams ask natural-language questions like “Which diabetic patients over 40 had rising HbA1c last year?” and instantly view diagnosis trends, patient journeys, and cohort analytics. This solution boosted clinical insight effectiveness by 85%, cut manual effort by 70%, and improved decision-making responsiveness by 90%.

3. Preventive healthcare and personal monitoring

Traditional healthcare models wait for symptoms to appear rather than catching warning signs early, often leading to reactive interventions. Our LLM-powered preventive health monitoring framework ingests wearable metrics, lifestyle logs, and environmental data through a multi-agent architecture, enabling personalized alerts and risk predictions in MIRA. This proactive system achieved a 90% reduction in cardiac risk, empowering users to adopt healthier behaviors and enabling providers to intervene before conditions worsen.

LLM use cases in finance and insurance

The financial industry thrives on information, yet analysts often struggle with siloed datasets, complex risk models, and time-intensive reporting. LLMs are addressing these challenges by enabling real-time financial insights, intelligent risk detection, and automation across investment, banking, and insurance operations.

1. Portfolio management and asset analysis

Financial analysts often face information overload, managing thousands of securities, market reports, and portfolio dependencies while trying to anticipate market movements. Our AI-powered portfolio intelligence system helped Corbin Capital streamline this process by continuously monitoring global financial data, identifying correlations, and simulating “what-if” scenarios across assets. It provides dynamic strategy recommendations based on real-time market trends, risk exposure, and historical performance. This intelligent agent enabled 99% faster portfolio design and 90% quicker market assessments and drove a 6% increase in revenue within three months, allowing analysts to focus on higher-value investment strategies instead of data aggregation.

2. Financial data analytics and real-time insights

In traditional financial setups, teams depend heavily on data engineers to extract insights from massive datasets using complex SQL queries. Our natural language–driven financial analytics agent revolutionizes this process by converting plain English queries into precise SQL commands and generating instant visualizations and dashboards for Indus Valley Partners. Analysts can now ask, “Show top-performing funds by volatility in Q4,” and receive results in seconds. This solution has democratized access to financial intelligence, resulting in faster decision-making, improved client satisfaction, and a more data-empowered workforce.

3. Insurance automation

Manual underwriting and claims processing often delay customer service and increase error rates in the insurance industry. Our LLM-powered insurance automation platform helped Ledgebrook extract data from unstructured policy documents, assess risks, classify businesses, and calculate premiums automatically. It ensures consistent, transparent, and audit-ready decision-making across underwriting workflows. This automation achieved an 80% faster turnaround time for policy issuance and claims, improved pricing precision, and delivered a noticeably better customer experience across the value chain.

LLM use cases in manufacturing

LLMs enhance manufacturing by optimizing procurement, supply chains, IoT operations, and financial planning. AI-driven insights improve efficiency, reduce costs, and enable faster decision-making.

1. Intelligent procurement and supply chain

Large manufacturers often struggle to manage hundreds of global vendors, volatile commodity prices, and complex shipment schedules, leading to inefficiencies in sourcing and procurement planning. Our AI-powered intelligent procurement and supply chain optimization system streamlines this process for ArcelorMittal Nippon Steel by ingesting live vendor data, market price feeds, and logistics updates to provide real-time recommendations. It identifies the best sourcing mix, predicts material shortages, and optimizes freight routes automatically. This solution achieved a 65% reduction in planning effort and a 60% improvement in forecast accuracy, resulting in optimized operations and stronger supplier collaboration.

2. Financial planning and executive decision support

Global organizations like Bosch manage vast financial data across regions, systems, and currencies, often creating delays in executive decision-making and strategic planning. Our LLM-driven financial planning and insights platform consolidates and normalizes data from multiple ERP and financial systems, highlights emerging trends, and provides real-time dashboards for leadership teams at Bosch. Executives can query performance drivers in natural language and instantly receive contextual insights for planning and forecasting. The system has delivered 10× faster decision-making, 80% quicker access to insights, and dramatically enhanced strategic and operational planning efficiency.

3. IoT data intelligence for oil and energy

Energy companies face the constant challenge of analyzing vast IoT data streams from oil rigs, sensors, and field equipment to ensure safety and efficiency. Our generative AI-based IoT data intelligence framework helped Enfinte Technologies automate anomaly detection, predictive maintenance, and operational optimization by interpreting millions of real-time signals from sensors. It provides engineers with actionable insights, reducing downtime and enhancing safety in high-risk environments. This deployment led to a 50% improvement in productivity, 25% faster extraction rates, and a significant reduction in operational disruptions, while promoting more sustainable and efficient energy production.

LLM use cases in technology

From SaaS automation to intelligent data analytics, LLMs are redefining how technology platforms operate. They power everything from software development assistants and bug detection agents to documentation copilots, IoT intelligence, and workflow automation frameworks. By embedding Gen AI into core systems, tech enterprises achieve faster development cycles, smarter data utilization, and more adaptive digital experiences, making innovation continuous and intelligent at every layer.

1. Enterprise knowledge management

Enterprises struggle with massive document repositories, technical PDFs, HTML help files, and DITA guides scattered across teams, making it difficult to find accurate information. Our knowledge graph-powered RAG and search intelligence framework enables Tungsten Automation’s users to query enterprise content using natural language, returning context-aware answers across millions of documents. This approach improved information retrieval accuracy by 85%, reduced search time by 70%, and boosted certification readiness by 60%.

2. Clinical documentation automation platform

Managing structured and unstructured healthcare documentation is complex, time-consuming, and prone to human error. Our LLM-driven documentation and workflow automation platform processes clinical notes, medical transcripts, and coding data using contextual understanding and structured data extraction for Mariana.ai. By combining domain-specific LLMs with workflow automation, it standardizes documentation, reduces redundancy, and ensures compliance across records. This solution achieved a 60% reduction in administrative workload, improved accuracy in clinical documentation, and enhanced end-user productivity, demonstrating how intelligent automation can scale across SaaS ecosystems beyond healthcare.

3. Software quality automation

Engineering teams spend extensive time identifying, replicating, and fixing bugs across development pipelines, leading to delays and quality issues. Our Gen AI-powered SDLC automation agent for Dev-Plaza connects to Jira, Git, and SonarQube to automatically detect, classify, and resolve code-level issues. It provides proactive fix recommendations and improves visibility into test coverage. This led to a 40% reduction in debugging effort, 25% faster sprint completion, and measurable improvement in software quality across projects.

LLM use cases in education

Large language models are transforming learning and academic workflows by providing personalized tutoring, automated content generation, and intelligent student support. From exam preparation assistants to adaptive learning platforms, LLMs enable educators and learners to access real-time insights, improve comprehension, and enhance engagement, making education more efficient, personalized, and scalable.

1. Intelligent learning assistant

Students preparing for competitive exams often face limited access to real-time, high-quality problem-solving support. Our LLM-powered learning and doubt-solving assistant for DoubtBuddy uses fine-tuned models to deliver accurate, step-by-step explanations of complex exam questions. With 95% response accuracy and adaptive guidance, it improved exam prep efficiency by 80% and student confidence by 60%, and sustained engagement across 1M+ queries.

2. Interactive content generation

Educational publishers face challenges in creating engaging content that captures learners’ attention. Our AI-powered educational storytelling engine for Ojje generates end-to-end storylines, visuals, and interactive books in minutes, reducing production time by 30% and boosting reader engagement by 90%. This Gen AI solution revolutionized children’s learning experiences through immersive, personalized narratives.

LLM use cases in retail and e-commerce

Large language models help retailers and e-commerce platforms deliver hyper-personalized shopping experiences, optimize inventory management, and automate customer support. By analyzing user behavior, product data, and market trends, LLMs enable intelligent recommendations, dynamic pricing, and efficient supply chain operations, boosting engagement, sales, and operational efficiency.

1. AI-powered search and recommendation

Shoppers often receive generic product results that ignore personal context and intent. Our context-aware recommendation engine prioritizes Techatomic’s user needs over profit-driven algorithms, offering fair, relevant, and ethical search outcomes. This platform improved conversion rates by 35%, reduced search abandonment by 50%, and enhanced personalized discovery for millions of users.

2. Store performance and analytics intelligence

Retailers with multiple outlets struggle to track performance, inventory, and conversion trends effectively. Our Gen AI-powered retail analytics agent for Taascom ingests store data across parameters like sales, inventory, and traffic to provide real-time actionable insights. It achieved a 65% increase in store productivity, 40% higher revenue, and smarter inventory optimization for small to mid-sized retailers.

3. Personalized fashion assistant

Fashion retailers need hyper-personalized shopping experiences to match evolving consumer expectations. Our Gen AI-driven fashion intelligence engine helped Modamia enable virtual try-ons, custom outfit recommendations, and brand-aligned designs in real time. This digital stylist improved buyer journey speed by 80%, reduced returns by 30%, and increased overall purchase satisfaction among luxury shoppers.

LLM use cases in government and public sector

Large language models empower government agencies to streamline public services, enhance citizen engagement, and improve policy analysis. By automating document processing, regulatory compliance, and real-time data insights, LLMs enable faster decision-making, transparent governance, and efficient delivery of public programs.

1. Intelligent transportation analytics

Large-scale transportation systems deal with massive, disconnected data across vehicles, routes, and infrastructure. Our AI-powered master data management and analytics solution for Dubai RTA automates data integration and delivers unified insights for public transport optimization. It led to 80% faster data preparation, 60% improved reporting accuracy, and better policy planning for urban mobility.

2. Smart public transit prediction

Urban transit networks need accurate predictions for vehicle arrivals and route efficiency. Our AI/ML-based ETA prediction infrastructure for Chalo uses geospatial, weather, and event data to optimize bus arrival predictions. It improved commuter satisfaction, reduced operational delays, and enhanced transit reliability across thousands of routes in real time.

LLM use cases for different job roles

Large Language Models are transforming how professionals across roles work, think, and make decisions. From automating repetitive tasks to generating insights and recommendations, LLMs act as intelligent copilots that augment human expertise. Whether it’s analysts, marketers, developers, or executives, every role can leverage LLMs to enhance productivity, accuracy, and creativity while enabling faster, data-driven decision-making.

LLM use cases for finance

1. Intelligent financial planning and executive decision support

Global enterprises often face fragmented financial data across systems, slowing scenario analysis and decision-making. Our Gen AI-powered financial analysis copilot for Bosch unifies data and enables executives to ask natural language questions like “Compare YTD revenue trends across regions” to get instant insights. The solution delivered 60% faster decisions, 35% more scenario-based insights, and 30% higher adoption of AI-driven analytics, empowering real-time strategic agility.

2. Real-time portfolio analysis and asset management

The NLP-powered generative AI copilot we developed for Corbin Capital delivers real-time portfolio intelligence by ingesting structured and unstructured financial data across documents, spreadsheets, scanned reports, and audio transcripts. Portfolio managers can query historical data, compare holdings, run mathematical functions, and extract asset-specific insights using natural language. The results were faster access to portfolio insights, streamlined workflows, enhanced collaboration across teams, and more reliable, accurate decision-making for high-value client portfolios.

LLM use cases for developers

1. Automated bug detection and code quality

Software development teams at Dev-Plaza faced challenges in identifying bugs early and maintaining consistent code quality across large repositories. Our agentic AI-powered SDLC infrastructure analyzes data across Jira, Git, GitHub Actions, and SonarQube to predict bugs before production, provide fix recommendations, and identify refactoring opportunities. This approach accelerates development cycles and significantly reduces production defects.

2. Natural language to SQL automation

Data analysts at OpenAsset struggled with time-consuming query creation and limited access to technical data insights. Our natural language query automation engine converts plain-text questions into accurate SQL queries, enabling users to interact seamlessly with structured data. This solution enhances processing efficiency and accuracy, empowering non-technical teams with self-service analytics.
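To make the idea concrete, here is a minimal Python sketch of the guardrail layer a natural-language-to-SQL flow typically needs. It is not the engine described above: `fake_generate_sql` is a hypothetical stand-in for the LLM call, the `sales` table is invented for illustration, and the read-only check is deliberately conservative (it rejects anything that is not a single SELECT, including CTEs).

```python
import sqlite3

def is_read_only_select(sql: str) -> bool:
    """Accept only a single SELECT statement (conservative by design)."""
    stripped = sql.strip().rstrip(";").strip()
    if ";" in stripped:  # more than one statement, or a ';' inside a literal
        return False
    return stripped.lower().startswith("select")

def run_generated_sql(conn: sqlite3.Connection, sql: str):
    """Validate model-generated SQL before executing it."""
    if not is_read_only_select(sql):
        raise ValueError("only single SELECT statements are allowed")
    statement = sql.strip().rstrip(";")
    # EXPLAIN compiles the statement without running it, so unknown
    # tables or columns raise sqlite3.Error before any data is read.
    conn.execute("EXPLAIN " + statement)
    return conn.execute(statement).fetchall()

def fake_generate_sql(question: str) -> str:
    """Hypothetical stand-in for the LLM that turns a question into SQL."""
    return ("SELECT region, SUM(amount) FROM sales "
            "GROUP BY region ORDER BY region")

# Illustrative in-memory data; a real deployment would point at its own schema.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("EU", 10.0), ("US", 5.0), ("EU", 2.5)],
)

rows = run_generated_sql(conn, fake_generate_sql("Total sales by region?"))
print(rows)
```

Validating before executing matters because generated SQL can reference columns that do not exist or attempt writes; rejecting those early keeps self-service analytics safe for non-technical users.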

3. IT support automation and developer productivity

Engineering teams at GuidePad experienced delays in resolving routine IT and support queries, impacting developer productivity. Our Gen AI-powered L1/L2 support automation system autonomously handles repetitive tickets and searches across multiple documentation sources in real time. This reduces manual workload, improves support responsiveness, and boosts overall development efficiency.

LLM use cases for sales

1. Property leasing co-pilot and lead management

Real estate teams at Unity AI faced inefficiencies in managing high inquiry volumes and coordinating property viewings. Our Gen AI-powered leasing co-pilot automates lead qualification, provides precise property recommendations, and schedules appointments autonomously. This resulted in a 40% reduction in response time, 60% increase in conversions, and a 90% shorter sales cycle, transforming lead management efficiency and customer engagement.

2. Solar energy inside sales agent

Sales representatives at Neighborhood Sun Solar struggled to manage customer interactions across multiple touchpoints during the sales cycle. Our Gen AI-powered inside sales agent tracks prospects throughout their buying journey, answering questions about products, incentives, and maintenance instantly. This led to enhanced engagement, improved customer experience, and a significant boost in conversion rates through real-time, personalized support.

3. AI-powered customer journey analytics

Marketing and sales teams at Kinetik Solutions needed deeper visibility into customer behavior and buying group dynamics. Our AI-driven GTM analytics solution analyzes engagement data, identifies buying groups, and uncovers behavioral patterns to drive targeted outreach. This delivered a 35% increase in conversion rates and shorter sales cycles, enabling smarter engagement and higher revenue impact.

LLM use cases for marketing

1. Hyper-personalized marketing content

Marketing teams at Solarplexus needed a scalable way to create personalized, region-specific campaign materials in real time. Our Gen AI-powered content generation platform, built using Claude and Midjourney, produces localized, hyper-personalized marketing collateral tailored to audience segments and geographies. This drove a 30% outreach conversion rate (up from less than 2%) within six months, significantly improving marketing ROI and customer engagement.

2. AI-powered brand collateral creation

Design and marketing teams at Mahindra & Mahindra faced delays in producing brand-aligned visual assets at scale. Our AI-driven design automation tool generates personalized ads, images, and videos in real time while ensuring brand consistency and creative quality. This resulted in 90% faster ad creation and substantial cost savings, enabling agile, data-driven marketing operations.

3. Personalized content and travel itineraries

The content and editorial team at Curly Tales needed an intelligent solution to automate itinerary generation and personalized content creation. Our Gen AI-based content automation platform streamlines the creation of travel guides, recommendations, and multimedia posts. This achieved a 70% reduction in content generation time, a 60% boost in engagement, and a 30% increase in conversions, transforming digital storytelling and audience reach.

LLM use cases for operations

1. Intelligent work order management

Operations teams at Dairyland Power struggled with manual work order handling, causing delays and compliance challenges. Our multi-agent AI system automates work order evaluation, enrichment, and verification across the maintenance lifecycle. This led to a 45% reduction in errors, 100% compliance, and significantly faster project completion through real-time intelligence and workflow optimization.

2. Master data management for transportation

The Roads and Transport Authority (RTA) of Dubai needed a unified data ecosystem to manage massive information silos across thousands of systems. Our AI-powered master data management solution integrates and harmonizes data from over 2,800 repositories, delivering 80% faster data preparation and 60% improved reporting accuracy. This enhances operational visibility and enables smarter urban mobility planning.

3. Real-time public transit ETA prediction

Public transport operations at Chalo faced inconsistencies in bus arrival predictions due to dynamic traffic and weather conditions. Our AI/ML-powered ETA prediction infrastructure analyzes geospatial, traffic, and environmental data in real time to optimize route timings. This resulted in a 20% reduction in operational costs and improved passenger satisfaction through accurate, real-time transit updates.

LLM use cases for procurement

1. Intelligent raw material sourcing

Procurement teams at Arcelor Mittal Nippon Steel faced challenges managing data across 230+ global vendors and shipments. Our Gen AI-powered NLP agent unifies live vessel updates, material reports, and market data to provide real-time sourcing insights. This solution reduced planning effort by 65%, improved accuracy by 60%, and enabled 80% faster decision-making, optimizing global procurement operations.

2. Compliance and certification management

Manual audit and certification workflows often slow compliance teams. Our multi-agent framework for CertBuddy AI automates compliance audits, identifies missing parameters, and generates required documentation autonomously. The solution improved audit accuracy by 40%, enhanced efficiency by 50%, and made the entire process 99% faster, ensuring seamless regulatory readiness.

3. IT audit and compliance automation

Organizations often spend months conducting IT audits across systems. Our Gen AI compliance agent for Sagax Team automates audit workflows, performs system scoring, and flags non-compliance areas in real time. This reduced audit timelines by 70%, decreased human errors by 50%, and shortened compliance cycles from 3 months to under a week, driving faster, data-driven governance.

LLM use cases for supply chain

1. IoT-powered oil extraction optimization

Oil and energy operators at Enfinite Technologies faced challenges analyzing massive IoT data streams from oil wells and rigs. Our knowledge extraction engine interprets real-time sensor data to identify anomalies and optimize extraction operations. This Gen AI-driven system improved productivity by 50%, reduced extraction time by 25%, and enhanced overall operational safety and efficiency.

2. Real-time supply chain intelligence

Global supply chain teams at Arcelor Mittal Nippon Steel needed faster insights across 230+ vendors and live vessel data sources to make informed sourcing decisions. Our Gen AI-powered NLP agent enables natural language queries for real-time visibility into vendor performance, shipment status, and material availability. This solution reduced planning effort by 65%, improved forecast accuracy by 60%, and accelerated strategic decision-making across procurement operations.

LLM use cases for HR

1. Relationship management and conflict resolution

Professionals at SurePeople faced challenges in managing interpersonal conflicts and maintaining team harmony. Our Gen AI-powered relationship advisor analyzes communication patterns and provides actionable guidance for navigating various professional and personal interactions. This solution reduced conflicts by 40% and accelerated resolution by 30%, fostering stronger relationships and smoother collaboration.

LLM use cases for customer success

1. Conversational product knowledge support

Technical teams at Tensor IOT struggled with timely resolution of product-related queries. Our NLP-powered AI system automates responses to technical questions, delivering instant, accurate answers across multiple channels. This solution reduced response time by 60%, improved customer satisfaction by 45%, and cut operational costs by 35%, enhancing overall support efficiency.

2. Real estate client engagement

Real estate agents at Unity AI faced delays in managing client inquiries and property recommendations. Our Gen AI-powered leasing co-pilot automates inquiry handling, provides tailored property suggestions, and schedules appointments seamlessly. This solution reduced response time by 40% and boosted conversion rates by 60%, streamlining the client engagement process.

LLM use cases for data analytics

1. Real-time banking analytics and fraud prevention

Banking teams at Miden faced challenges in monitoring transactions and detecting fraud efficiently. Our AI-powered analytics platform leverages Python and AWS to deliver real-time insights, optimize transaction monitoring, and generate intelligent dashboards for reporting. This solution reduced fraud detection time by 82% and enhanced scalability, improving overall financial operations and risk management.

2. Master data management and transportation analytics

The Dubai RTA faced challenges in managing and analyzing data across 2,800+ repositories for transportation operations. Our AI-powered solution automates data extraction, processing, and insights generation, enabling faster, more accurate decision-making. This implementation achieved 80% faster data preparation and improved operational efficiency, transforming transportation analytics through Gen AI-powered data mapping and master data management.

3. Gen AI-powered query automation

Analysts at OpenAsset experienced slow, complex data retrieval from Aurora DB. Our NLP-powered Text-to-SQL engine converts natural language questions into SQL queries, cutting processing time by 40% and improving accuracy by 35% for seamless, efficient data interactions.

Emerging trends that will impact LLM use cases

Adoption of LLM use cases is evolving with trends like multimodal models that combine text, image, and speech understanding, and agentic AI that enables autonomous decision-making. The shift toward domain-specific, lightweight models, along with advances in privacy-first architectures and synthetic data generation, is making LLMs more scalable, secure, and impactful across industries.

1. Agentic AI: autonomous workflows

Nearly 90% of technology leaders plan to increase their AI budgets in the coming year, driven by the rapid rise of agentic AI: systems capable of reasoning about goals, planning multi-step workflows, and executing tasks autonomously. According to Gartner, by 2026, 40% of enterprise applications will feature embedded AI agents, signaling a fundamental transformation toward intelligent, self-directed automation across industries.

2. Code generation as killer app

Code generation transformed software development into a $1.9 billion ecosystem in one year. Claude captured 42% market share. Capabilities include full-stack generation, legacy modernization, intelligent debugging, and security vulnerability detection. Enterprise teams see 30-50% faster development cycles.

3. Multimodal intelligence standard

GPT-5, Gemini 2.5 Pro, and Claude 4 process text, images, audio, and video seamlessly. Applications span medical image analysis, visual quality inspection, property validation, and interactive content generation. Cross-modal reasoning enables comprehensive analysis.

4. Extreme context windows

Models with 200K+ token context windows (such as the Claude series) enable whole-codebase analysis, review of year-long patient histories, and comprehensive contract review. Processing a document in one pass reduces the information loss that comes from chunking and supports more complete insights.
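As a rough illustration of the trade-off, the Python sketch below decides whether a set of documents fits in one context window or has to be split into batches. The four-characters-per-token estimate is a crude assumption (real tokenizers differ), and `plan_context` and its default budgets are hypothetical names chosen for this example.

```python
def estimate_tokens(text: str) -> int:
    """Crude estimate: roughly 4 characters per token for English text."""
    return max(1, len(text) // 4)

def plan_context(documents, context_window=200_000, reserved_for_output=4_000):
    """Return ("single_pass", [docs]) if everything fits, else greedy batches."""
    budget = context_window - reserved_for_output
    total = sum(estimate_tokens(doc) for doc in documents)
    if total <= budget:
        return "single_pass", [list(documents)]

    batches, current, used = [], [], 0
    for doc in documents:
        cost = estimate_tokens(doc)
        # Start a new batch when adding this document would exceed the budget.
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        # Note: a single document larger than the budget still gets its own
        # (oversized) batch; splitting inside a document is out of scope here.
        current.append(doc)
        used += cost
    if current:
        batches.append(current)
    return "chunked", batches

docs = ["a" * 400, "b" * 400]  # about 100 estimated tokens each
print(plan_context(docs, context_window=1_000, reserved_for_output=100)[0])
print(plan_context(docs, context_window=250, reserved_for_output=100)[0])
```

With a large window both documents go through in a single pass; with a small one they are split, which is where cross-document context can get lost.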

5. Small language models for edge

Specialized models (Gemma, streamlined LLaMA) run on devices, keeping data local for stronger privacy, avoiding network round trips for lower latency, and reducing inference costs. Use cases include HIPAA-compliant healthcare processing, real-time manufacturing quality control, and offline field operations.

6. Domain-specific fine-tuning

Specialized models for healthcare, finance, manufacturing, and legal achieve 40-60% better accuracy in domain tasks. 50% of financial digital work is estimated to be automated by 2025 through specialized LLMs.

7. Real-time knowledge integration

Live data integration through APIs, web search, and database queries eliminates outdated information. Financial markets use real-time price feeds, healthcare integrates current vitals, and supply chains track live logistics.
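One way to picture this is a prompt builder that only includes facts fresh enough to trust. The Python sketch below is an assumption-laden illustration: `build_grounded_prompt`, the fact dictionary shape, and the five-minute freshness limit are all hypothetical, and the facts would come from your own APIs or feeds.

```python
from datetime import datetime, timedelta, timezone

def build_grounded_prompt(question, facts, now, max_age=timedelta(minutes=5)):
    """Build a prompt from facts whose timestamps are within max_age of now."""
    fresh = [fact for fact in facts if now - fact["as_of"] <= max_age]
    lines = [
        f"- {fact['text']} (as of {fact['as_of'].isoformat()})"
        for fact in fresh
    ]
    context = "\n".join(lines) if lines else "- no fresh data available"
    return f"Context:\n{context}\n\nQuestion: {question}"

now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
facts = [
    {"text": "Shipment A is at port", "as_of": now - timedelta(minutes=1)},
    {"text": "Shipment B is delayed", "as_of": now - timedelta(hours=1)},
]
prompt = build_grounded_prompt("Where is shipment A?", facts, now)
print(prompt)
```

Stamping each fact with its retrieval time lets the model, and the reader, see how current the answer is.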

8. Hybrid reasoning architectures

Reasoning models like DeepSeek-R1 generate explicit step-by-step reasoning before answering, and can be paired with symbolic tools such as solvers or proof checkers for more rigorous problem-solving. Applications include mathematical proofs, legal reasoning, and scientific research where answers need to be verifiable.

9. Intelligent model routing

Organizations achieve 50-70% cost reductions by routing queries to appropriate models based on complexity. Simple questions go to lightweight models, complex reasoning to premium models, without quality degradation.
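A simple version of this routing logic can be sketched in Python. The heuristics here (query length and a short keyword list) are illustrative assumptions, and `lightweight-model` and `premium-model` are placeholder names rather than real products; production routers often use a trained classifier instead.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    model: str
    reason: str

# Hypothetical hints that a query needs multi-step reasoning.
REASONING_HINTS = ("why", "compare", "analyze", "step by step", "prove", "trade-off")

def route_query(query: str, long_query_words: int = 60) -> Route:
    """Send long or reasoning-heavy queries to the premium model."""
    text = query.lower()
    if len(text.split()) > long_query_words:
        return Route("premium-model", "long query")
    if any(hint in text for hint in REASONING_HINTS):
        return Route("premium-model", "reasoning keywords")
    return Route("lightweight-model", "short factual query")

print(route_query("What is our refund policy?"))
print(route_query("Compare YTD revenue trends across regions"))
```

Returning the reason alongside the model name makes routing decisions easy to log and audit when you tune the thresholds later.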

10. Composable AI architectures

Modular, API-first designs enable 80% faster feature implementation. Organizations avoid vendor lock-in, rapidly adapt to new models, and optimize costs through component-level substitution.
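Component-level substitution usually comes down to coding against a small interface rather than a vendor SDK. The Python sketch below shows the pattern with `typing.Protocol`; `TextModel`, `EchoModel`, `UpperModel`, and `Summarizer` are hypothetical names, and the two toy models stand in for real providers.

```python
from typing import Protocol

class TextModel(Protocol):
    """Minimal interface every model adapter must satisfy."""
    def complete(self, prompt: str) -> str: ...

class EchoModel:
    """Toy adapter standing in for one provider."""
    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"

class UpperModel:
    """Toy adapter standing in for a different provider."""
    def complete(self, prompt: str) -> str:
        return prompt.upper()

class Summarizer:
    """Application feature that depends only on the TextModel interface."""
    def __init__(self, model: TextModel) -> None:
        self._model = model

    def summarize(self, text: str) -> str:
        return self._model.complete(f"Summarize: {text}")

# Swapping the model requires no change to Summarizer itself.
print(Summarizer(EchoModel()).summarize("quarterly report"))
print(Summarizer(UpperModel()).summarize("quarterly report"))
```

Because the feature never imports a provider directly, adopting a new model means writing one adapter instead of touching every call site.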

11. Embedded compliance

Automatic PII detection, complete audit trails, bias monitoring, and data residency controls are increasingly built into architectures rather than bolted on later. Approaches such as Constitutional AI aim to build safety and alignment in from the ground up.
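For a sense of what built-in PII handling looks like, here is a minimal Python sketch that redacts emails and phone numbers before text reaches a model and records what was removed. The two regular expressions are simplified assumptions that will miss many real-world formats, and `redact_pii` is a hypothetical helper; production systems typically rely on dedicated detection services.

```python
import re

# Simplified patterns for illustration only; real PII detection needs more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def redact_pii(text: str, audit_log: list) -> str:
    """Replace matched PII with placeholders and append counts to audit_log."""
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            # Log the type and count only, never the redacted value itself.
            audit_log.append({"type": label, "count": count})
    return text

audit_log = []
clean = redact_pii("Mail jane@example.com or call 555-123-4567.", audit_log)
print(clean)
print(audit_log)
```

Logging only the type and count keeps the audit trail useful for compliance reviews without turning the log itself into a store of sensitive data.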

12. Human-AI collaboration

The future is augmentation, not replacement. AI handles routine tasks, escalates complex decisions, and provides decision support. 96% of enterprise IT leaders plan to expand AI agent use, focusing on workflows where humans supervise strategic decisions while AI manages execution.
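The escalation pattern described above can be sketched in a few lines of Python. The `Decision` fields, the `dispatch` function, and the 0.8 confidence threshold are all hypothetical choices for illustration; where the confidence score comes from depends on your own system.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    action: str
    confidence: float          # assumed to be a score between 0 and 1
    high_impact: bool = False  # e.g. strategic or irreversible actions

def dispatch(decision: Decision, min_confidence: float = 0.8) -> str:
    """Auto-execute routine, confident actions; escalate everything else."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "escalate_to_human"
    return "auto_execute"

print(dispatch(Decision("close routine ticket", confidence=0.95)))
print(dispatch(Decision("approve vendor contract", confidence=0.95, high_impact=True)))
print(dispatch(Decision("reclassify invoice", confidence=0.55)))
```

Treating impact and confidence as separate triggers keeps humans in charge of strategic decisions even when the model is highly confident.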

How to implement your LLM use cases

The biggest LLM and AI challenge lies in execution: too many pilots, not enough scale to production. Most initiatives stall because scalability, governance, and enterprise integration aren’t built in from the start.

Transforming an LLM use case from concept to production requires engineering excellence. In our experience, companies that succeed follow a structured approach that balances innovation with practical results.

Step 1: 4-Day POC

Begin with a full-day Gen AI discovery workshop led by our experts. In just 4 days, receive a comprehensive roadmap and a Proof of Concept for your highest-priority LLM use case.

Step 2: 4-Week Pilot

We conduct a deep-dive discovery, design a business case, and select the right LLM or Gen AI model for your needs. In 4 weeks, you get a fully functional, tested pilot ready for stakeholder validation.

Step 3: 4-Month Implementation

We establish governance, compliance, and security guardrails, ensuring enterprise readiness. In 4 months, your LLM-powered application moves seamlessly from pilot to production.

Step 4: Long-Term Engagement

Scale effortlessly with a dedicated AI Ops team driving continuous innovation. Within 3 days, we deploy AI specialists to collaborate on your long-term roadmap of LLM and Gen AI use cases, ensuring sustained success and growth.

Ready to move beyond pilots?

Our proprietary AI acceleration framework can enable your AI journey through four proven stages: Discovery (1 day) → Design (4 days) → Development (4 weeks) → Deployment (4 months), enabling 10X faster implementation with 50+ successful deployments.
