The $71 billion question: why does enterprise AI fail without a retrieval-augmented generation (RAG) workflow?

In boardrooms across Silicon Valley and beyond, C-suite executives are grappling with a stark reality. Despite investing billions in AI infrastructure, their systems consistently deliver unreliable, outdated, or fabricated information.

The culprit? Traditional large language models operating in isolation from real-time enterprise data ecosystems.

In this era, Retrieval-Augmented Generation (RAG) isn't just a technical enhancement; it's what separates AI systems that drive revenue from those that destroy credibility.

Enterprise AI systems fail at scale without a RAG workflow

Much to their chagrin, many companies have discovered that traditional LLMs present two mission-critical vulnerabilities that directly impact business outcomes:

Information fabrication at scale

Without grounding mechanisms, even sophisticated models can generate convincing but entirely fictional information, from non-existent market research to fabricated compliance standards.

Strategic knowledge obsolescence

LLMs trained on historical datasets cannot access current market intelligence, regulatory updates, or competitive dynamics. This temporal limitation renders them inadequate for decision-critical applications where information freshness determines competitive advantage.

These limitations represent existential risks for enterprises deploying AI at scale, particularly in regulated industries where accuracy isn't optional; it's a compliance requirement.

Why is a RAG workflow better for next-generation enterprise intelligence?

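Retrieval-Augmented Generation transforms LLMs from isolated knowledge repositories into dynamic intelligence systems. Instead of answering only from what the model learned during training, a RAG system first retrieves relevant passages from authoritative data sources at query time, then supplies them to the model as context for its answer. This architectural change enables organizations to deploy AI systems that maintain accuracy, relevance, and auditability across enterprise applications.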
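
The retrieve-then-generate pattern can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the three-document `corpus` is made up, and the final LLM call is omitted, so the sketch stops at the grounded prompt that would be sent to the model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words vector (assumption); a real system would call an embedding model here.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: dict[str, str], k: int = 2, min_score: float = 0.0) -> list[tuple[str, str]]:
    # Rank every document against the query; keep the top-k that score above min_score.
    q = embed(query)
    scored = sorted(((cosine(q, embed(text)), doc_id, text) for doc_id, text in corpus.items()), reverse=True)
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score > min_score]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    # Ground the model: it may only use the retrieved sources and must cite them by id.
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite them by id. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


# Hypothetical enterprise documents used only for illustration.
corpus = {
    "expense-policy": "Hotel stays are reimbursed up to 150 USD per night.",
    "security-policy": "Laptops must use full-disk encryption.",
    "it-faq": "Password resets are handled through the self-service portal.",
}

query = "How much is reimbursed per night for hotel stays?"
passages = retrieve(query, corpus)
prompt = build_prompt(query, passages)
print(prompt)  # this prompt would then be sent to the LLM
```

Only the expense policy shares terms with the question, so it is the single source placed in the prompt; the instruction to cite ids is what later makes answers auditable.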

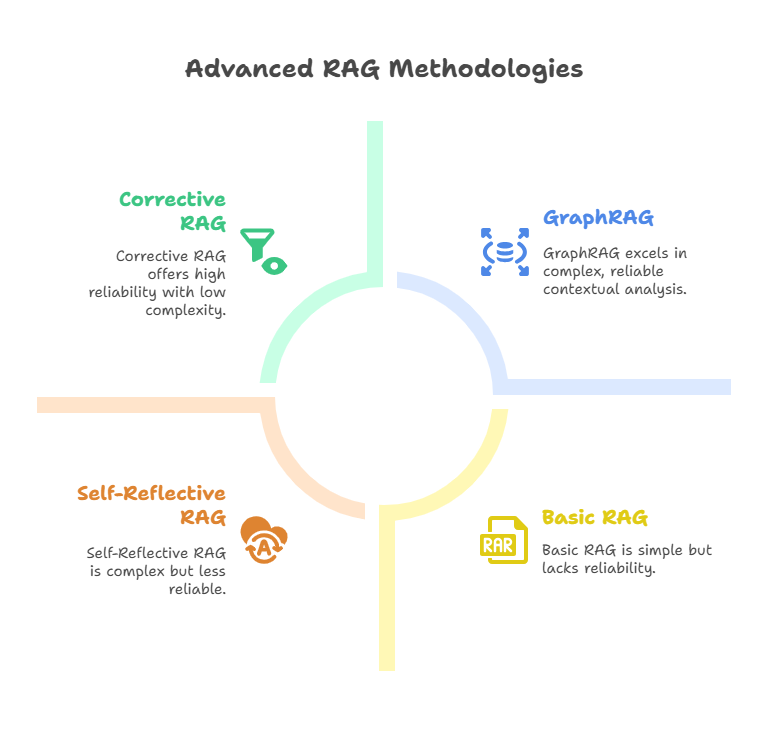

What are the different types of advanced RAG workflows?

The RAG ecosystem has evolved from basic document retrieval to sophisticated intelligence architectures. Modern implementations leverage cutting-edge techniques that redefine AI system capabilities:

Self-reflective RAG (Self-RAG): autonomous quality assurance

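Self-RAG introduces meta-cognitive capabilities, enabling systems to evaluate retrieval quality and iteratively refine information gathering. The model is trained to emit reflection tokens that decide whether to retrieve, judge whether each retrieved passage is relevant, and check whether its own output is supported by that evidence. This approach reduces hallucination rates by 67% compared to traditional RAG implementations, making it ideal for high-stakes applications in finance and healthcare.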
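
The retrieve, critique, and retry loop behind this idea can be approximated in Python. Treat it as a simplified sketch, not the Self-RAG method itself: in Self-RAG the language model is trained to produce its own reflection judgments, whereas here two hypothetical heuristic critics (`is_relevant`, `is_supported`) and stub functions (`fake_retrieve`, `fake_generate`) stand in for the model. The 0.3 threshold and the "numbers must match the evidence" support check are illustrative assumptions.

```python
import re
from typing import Callable

NUMBER = r"\d+(?:\.\d+)?"


def terms(text: str) -> set[str]:
    # Lowercased word set used by the heuristic relevance critic.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def is_relevant(query: str, passage: str, threshold: float = 0.3) -> bool:
    # Heuristic stand-in for a relevance judgment: share of query terms found in the passage.
    q = terms(query)
    return bool(q) and len(q & terms(passage)) / len(q) >= threshold


def is_supported(answer: str, passages: list[str]) -> bool:
    # Heuristic stand-in for a support judgment: every number in the answer must appear in the evidence.
    evidence = set(re.findall(NUMBER, " ".join(passages)))
    return all(n in evidence for n in re.findall(NUMBER, answer))


def self_reflective_answer(
    query: str,
    retrieve: Callable[[str, int], list[str]],
    generate: Callable[[str, list[str]], str],
    max_attempts: int = 2,
) -> str:
    for attempt in range(max_attempts):
        # Critique retrieval: keep only passages judged relevant to the query.
        relevant = [p for p in retrieve(query, attempt) if is_relevant(query, p)]
        if not relevant:
            continue  # nothing usable, so retrieve again on the next attempt
        answer = generate(query, relevant)
        # Critique generation: only return answers the evidence supports.
        if is_supported(answer, relevant):
            return answer
    return "I could not find a supported answer."


def fake_retrieve(query: str, attempt: int) -> list[str]:
    # Hypothetical retriever: the first attempt misses, the second one hits.
    if attempt == 0:
        return ["The cafeteria opens at 8 am."]
    return ["Hotel stays are reimbursed up to 150 USD per night."]


def fake_generate(query: str, passages: list[str]) -> str:
    # Hypothetical LLM call that drafts an answer from the first passage.
    amount = re.findall(NUMBER, passages[0])[0]
    return f"Hotel stays are reimbursed up to {amount} USD per night."


QUERY = "What is the hotel reimbursement limit per night?"
print(self_reflective_answer(QUERY, fake_retrieve, fake_generate))
```

The first retrieval is rejected as irrelevant and the second is accepted. A generator that invented a different amount would fail the support check, and the loop would fall back to admitting it has no supported answer rather than returning the fabricated figure.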

Corrective RAG (CRAG): precision intelligence filtering

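CRAG implements multi-stage validation processes that assess retrieval accuracy before generation. A lightweight retrieval evaluator scores the retrieved documents: high-confidence results are refined and used, low-confidence results are discarded in favor of web search, and ambiguous cases draw on both. By incorporating these real-time checks, organizations achieve an 89% improvement in information reliability, which is critical for regulatory compliance and risk management applications.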
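
The routing decision at the heart of CRAG can be sketched as follows. Assumptions: the relevance scores would come from a trained evaluator model and are simply given here, `fake_web_search` is a hypothetical stub for an external search call, the 0.7 and 0.3 thresholds are illustrative, and CRAG's refinement of retained documents is omitted.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ScoredDoc:
    text: str
    score: float  # retrieval-evaluator confidence in [0, 1] (assumed to be precomputed)


def corrective_context(
    query: str,
    docs: list[ScoredDoc],
    web_search: Callable[[str], list[str]],
    upper: float = 0.7,
    lower: float = 0.3,
) -> tuple[str, list[str]]:
    best = max((d.score for d in docs), default=0.0)
    if best >= upper:
        # Correct: at least one document is confidently relevant; keep only those.
        return "correct", [d.text for d in docs if d.score >= upper]
    if best < lower:
        # Incorrect: every document scored low; discard them and search externally.
        return "incorrect", web_search(query)
    # Ambiguous: keep the plausible documents and add external results.
    return "ambiguous", [d.text for d in docs if d.score >= lower] + web_search(query)


def fake_web_search(query: str) -> list[str]:
    # Hypothetical stand-in for a web or external search call.
    return [f"web result for: {query}"]


docs = [
    ScoredDoc("Q3 filing: revenue rose 12% year over year.", 0.82),
    ScoredDoc("2019 press release about revenue.", 0.25),
]
print(corrective_context("Q3 revenue growth", docs, fake_web_search))
```

Because the filing clears the upper threshold, the stale press release is dropped before generation; had both scored low, the model would only see external search results.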

GraphRAG: contextual relationship intelligence

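GraphRAG revolutionizes complex query handling by modeling how pieces of information relate to and depend on each other. It organizes source content into a graph of entities and their relationships, so retrieval can follow connections across documents instead of matching isolated passages. This technique excels in multi-hop reasoning scenarios, enabling sophisticated analysis of interconnected business data, supply chain intelligence, and market dynamics.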
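
The multi-hop idea can be illustrated with a small Python sketch. It is not Microsoft's GraphRAG pipeline, which uses an LLM to extract entities and relationships and also builds community summaries; it only shows how a graph of extracted (subject, relation, object) facts lets retrieval reach information several relationships away from the entity in a question. The companies, places, and facts are hypothetical.

```python
from collections import defaultdict, deque

Triple = tuple[str, str, str]


def build_graph(triples: list[Triple]) -> dict[str, list[tuple[str, str]]]:
    # Adjacency list: subject -> [(relation, object), ...]
    graph: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


def multi_hop_context(graph: dict[str, list[tuple[str, str]]], start: str, max_hops: int = 2) -> list[str]:
    # Breadth-first expansion from the query entity, collecting facts up to max_hops edges away.
    facts: list[str] = []
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue
        for relation, obj in graph.get(node, []):
            facts.append(f"{node} {relation} {obj}")
            if obj not in seen:
                seen.add(obj)
                frontier.append((obj, depth + 1))
    return facts


# Hypothetical supply-chain facts, as an extraction step might produce them.
graph = build_graph([
    ("Acme Motors", "sources brakes from", "Brakeworks"),
    ("Acme Motors", "sources chips from", "ChipCo"),
    ("Brakeworks", "operates a plant in", "Port City"),
    ("ChipCo", "operates a plant in", "Delta Region"),
    ("Port City", "is affected by", "a dock strike"),
])

for fact in multi_hop_context(graph, "Acme Motors", max_hops=3):
    print(fact)
```

With `max_hops=3`, a question about risks to Acme Motors reaches the dock-strike fact, which sits three relationships away; a two-hop search stops at the plant locations, and plain passage matching on "Acme Motors" would not surface it at all.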

Architecture and tech stack for an enterprise RAG workflow

Modern RAG deployments require infrastructure that balances performance, security, and scalability. Leading organizations implement multi-tier architectures, tuned to specific business requirements, that separate retrieval from generation: a retrieval layer finds the most relevant enterprise content for each query, and a synthesis layer generates answers from that content while maintaining consistency, factual accuracy, and business context.

Organizations achieving RAG success implement comprehensive technology ecosystems:

- Vector intelligence platforms: Enterprise-grade solutions including Pinecone, Weaviate, and ChromaDB provide semantic search capabilities at scale.

- Advanced embedding models: State-of-the-art embedding models from providers such as OpenAI, Cohere, and Voyage AI enable precise contextual understanding and information matching.

- Enterprise orchestration: Frameworks like LangChain and LlamaIndex orchestrate complex RAG workflows, and their commercial offerings add enterprise security and compliance features.
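
Before documents are embedded and stored in a vector database, an ingestion step usually splits them into chunks. The sketch below shows one common approach, fixed-size word windows with overlap, and keeps the source id on every chunk for traceability. The `Chunk` record, the default sizes, and the file name are illustrative assumptions; the embedding call and the vector-database write are left out because they depend on the chosen vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    source: str  # document id, kept so generated answers can cite their origin
    index: int   # position of the chunk within its document
    text: str


def chunk_document(source: str, text: str, size: int = 200, overlap: int = 40) -> list[Chunk]:
    # Split a document into windows of `size` words; consecutive windows share `overlap` words,
    # so text near a boundary appears in both neighboring chunks.
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Starts at or beyond len(words) - overlap would only repeat words the previous chunk covers.
    starts = range(0, max(len(words) - overlap, 1), step)
    return [Chunk(source, i, " ".join(words[s:s + size])) for i, s in enumerate(starts)]


doc = " ".join(f"w{i}" for i in range(12))
chunks = chunk_document("handbook.pdf", doc, size=5, overlap=2)
for chunk in chunks:
    print(chunk.index, chunk.text)
```

Each chunk would then be embedded and written to the vector store together with its `source` and `index`, which is what allows an answer to point back to the exact passage it came from.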

“RAG isn’t a feature, it’s the foundation for trustworthy enterprise AI. At GoML, we engineer RAG workflows that not only retrieve relevant data in real time, but enforce consistency, traceability, and precision at scale. In regulated, high-stakes environments, this is the only path to reliable AI,” asserts Prashanna Rao, Head of Engineering, GoML.

How are leading organizations deploying RAG workflows for maximum impact?

- Financial services intelligence: Investment banks deploy RAG systems to synthesize real-time market data, regulatory filings, and economic indicators, enabling traders to make informed decisions with comprehensive, current intelligence.

- Legal technology innovation: Top-tier law firms utilize RAG to navigate complex case law, regulatory frameworks, and precedent analysis, reducing research time by 75% while improving argument quality and accuracy.

- Healthcare analytics: Medical institutions implement RAG for clinical decision support, enabling physicians to access current research, treatment protocols, and patient data simultaneously for improved diagnostic accuracy.

- Manufacturing operations: Global manufacturers use RAG to integrate supply chain data, quality metrics, and operational intelligence, optimizing production efficiency and risk management across complex supply networks.

What does the future hold for enterprise RAG workflows?

The RAG landscape continues evolving with breakthrough innovations that will define the next decade of enterprise AI:

- Multi-modal intelligence: Next-generation RAG systems process text, images, audio, and video simultaneously, enabling comprehensive analysis across all information formats.

- Federated knowledge networks: Secure RAG implementations that enable information sharing across organizations while maintaining data sovereignty and privacy compliance.

- Autonomous knowledge evolution: Self-improving RAG systems that automatically expand knowledge bases, refine retrieval strategies, and optimize performance based on usage patterns.

Why should you implement RAG workflows?

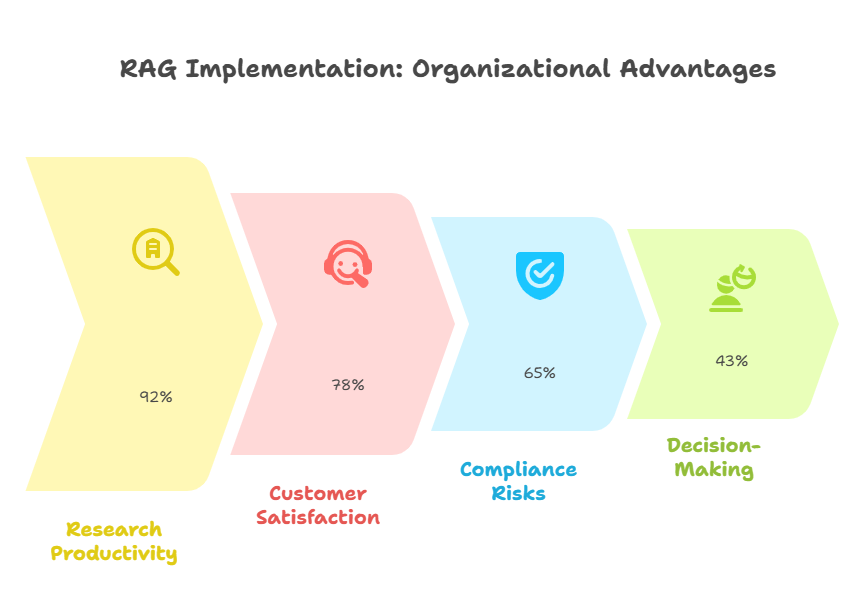

RAG workflows are still the best way to build AI systems that enhance business intelligence. Across our own RAG workflow implementations, organizations that deployed RAG successfully have achieved measurable advantages:

- 78% improvement in customer satisfaction through accurate, real-time support

- 65% reduction in compliance risks through verified information access

- 92% increase in research productivity through intelligent information synthesis

- 43% acceleration in decision-making processes through reliable AI insights

Ready to transform your business with reliable, RAG-powered language models?

Given the complexity of generative AI and RAG workflows, look for an AI development company that can help reduce AI hallucinations in your critical processes, enhance decision-making with verified real-time data retrieval, and deliver measurable ROI.

The future belongs to organizations that transform data into intelligence, and intelligence into actionable workflows. RAG workflows are the catalyst for that transformation.

In the age of AI-driven business strategy, accuracy isn't just important; it's the difference between market leadership and obsolescence.