Teams building retrieval-augmented generation (RAG) systems for generative AI workloads often observe a peculiar pattern. Early tests look solid. Internal demos perform well. Answers sound grounded and confident. Then the system moves into real usage, the document set grows, and accuracy starts to slip in several different ways.

The responses still read well. The structure looks correct. Yet users begin to flag answers as incomplete, outdated or contextually wrong.

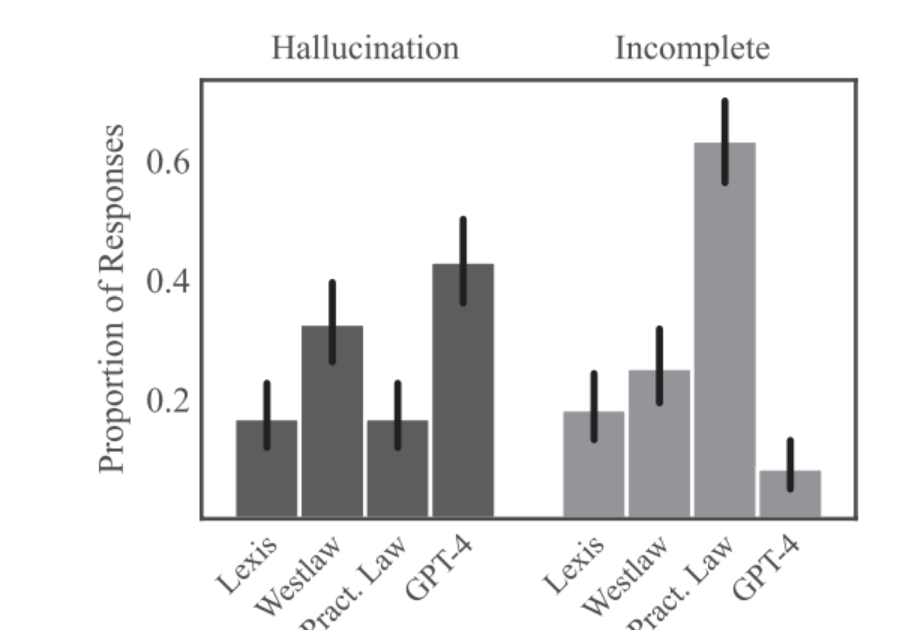

A recent Stanford research paper on AI systems for legal work explains why this happens.

The issue is not prompt quality or model size. It is not a lack of data. It is how retrieval behaves when systems scale beyond certain limits.

Why this research matters beyond legal AI

The Stanford paper is framed around legal AI, but the failure mode applies to nearly every large-scale RAG system in production today.

Enterprise teams use RAG to search internal knowledge bases, power support assistants, summarize research and answer operational questions. These systems often index thousands or millions of documents into a single vector database and rely on semantic similarity to retrieve context.

This design works at small scale. It becomes unreliable as collections grow and diversify.

The Stanford findings are important because they show that this breakdown is predictable. It is not an edge case and not the result of misuse. It is a structural limitation.

What the Stanford researchers studied

Researchers from Stanford University evaluated retrieval-augmented systems operating over large document collections. Their work was published in the Journal of Empirical Legal Studies.

The systems followed standard RAG architecture. Documents were embedded into high-dimensional vector spaces. Queries retrieved nearby documents based on semantic distance. Language models generated answers using the retrieved context.
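For reference, here is a minimal sketch of the single-index retrieval step described above. It is illustrative only: `embed` is a hypothetical stand-in that hashes words into a fixed-size vector instead of calling a real embedding model, and `SingleIndex` is an invented class, not the API of any particular vector database. The toy corpus also hints at the failure mode: a superseded policy ranks right alongside the current one, because nothing in the similarity score encodes recency.

```python
import hashlib

import numpy as np

DIM = 1024  # illustrative embedding size


def embed(text: str) -> np.ndarray:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = np.zeros(DIM)
    for token in text.lower().split():
        # md5 gives a stable hash across runs, unlike Python's built-in hash().
        idx = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[idx] += 1.0
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec


class SingleIndex:
    """Every document lives in one flat vector index, searched by cosine similarity."""

    def __init__(self) -> None:
        self.docs: list[str] = []
        self.vectors: list[np.ndarray] = []

    def add(self, doc: str) -> None:
        self.docs.append(doc)
        self.vectors.append(embed(doc))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        if not self.docs:
            return []
        matrix = np.vstack(self.vectors)
        scores = matrix @ embed(query)  # cosine similarity, since all vectors are unit length
        top = np.argsort(-scores)[:k]
        return [(self.docs[i], float(scores[i])) for i in top]


index = SingleIndex()
for doc in [
    "refund policy for enterprise customers 2024",
    "refund policy for enterprise customers 2019 superseded",
    "onboarding checklist for new engineers",
]:
    index.add(doc)

for doc, score in index.search("current refund policy", k=2):
    print(f"{score:.3f}  {doc}")
```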

At small scale, retrieval behaved as expected. Relevant documents clustered together, and generated answers aligned with source material.

As the document set expanded, performance declined sharply. Once collections crossed roughly ten thousand documents, retrieval precision began to drop. Beyond fifty thousand documents, semantic search accuracy collapsed in a measurable way. In several evaluations, it performed worse than traditional keyword search.

The decline was consistent and repeatable.

Semantic collapse and why retrieval fails at scale

The Stanford study points to a geometric limitation in high-dimensional vector spaces.

When a vector space holds relatively few embeddings, distance is meaningful. Similar documents sit closer together. Irrelevant ones are farther away. As more vectors are added, the space becomes crowded. Distances begin to converge. Semantic distinctions weaken.

Researchers refer to this phenomenon as semantic collapse.

When semantic collapse sets in, retrieval can no longer reliably separate what matters from what merely sounds similar. Documents from different domains, time periods, or contexts appear equally relevant to a query. The system loses its ability to filter.
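The crowding effect can be illustrated with a toy simulation. This is not a reproduction of the Stanford study's method or numbers: it uses random Gaussian vectors in place of real embeddings, and the dimension, noise level, and corpus sizes are arbitrary assumptions. Each query has exactly one relevant document (a noisy copy of the query, with cosine similarity of roughly 0.45), and the rest of the index is unrelated noise. As more unrelated vectors are added, the best-scoring distractor creeps upward until it regularly outranks the relevant document.

```python
import numpy as np


def unit_rows(x: np.ndarray) -> np.ndarray:
    """Normalize each row to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def top1_hit_rate(n_distractors: int, dim: int = 64, noise: float = 0.25,
                  trials: int = 200, seed: int = 0) -> float:
    """Fraction of queries whose single relevant document still ranks first
    when `n_distractors` unrelated random vectors share the same index."""
    rng = np.random.default_rng(seed)
    queries = unit_rows(rng.standard_normal((trials, dim)))
    # Each relevant document is a noisy copy of its query (cosine ~0.45 at these settings).
    relevant = unit_rows(queries + noise * rng.standard_normal((trials, dim)))
    # With a fixed seed, smaller distractor sets are prefixes of larger ones,
    # so comparisons across corpus sizes are paired.
    distractors = unit_rows(rng.standard_normal((n_distractors, dim)))

    relevant_scores = np.sum(queries * relevant, axis=1)
    best_distractor = (queries @ distractors.T).max(axis=1)
    return float(np.mean(relevant_scores > best_distractor))


for n in (100, 1_000, 10_000):
    print(f"{n:>6} distractors: top-1 hit rate {top1_hit_rate(n):.2f}")
```

With these settings the hit rate should fall noticeably between 100 and 10,000 distractors. The exact values depend on the assumed parameters, so the trend, not the numbers, is the point.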

This behavior is not specific to legal data. It applies to any RAG system that pushes large, mixed corpora into a single vector space.

Why RAG failures often look correct

One of the most dangerous aspects of large-scale RAG failure is how convincing the outputs appear. The language model is not inventing content randomly. It is synthesizing answers from retrieved documents that are semantically similar but contextually wrong.

In legal systems, this shows up as real cases applied to the wrong jurisdiction or outdated rulings treated as current law. In enterprise systems, it appears as old policies presented as valid guidance or internal notes treated as official decisions.

From the model’s perspective, the inputs look legitimate. The failure has already happened at retrieval.

This is why hallucinations at scale are systematic rather than sporadic. The system repeats the same type of mistake because retrieval is consistently pulling the wrong kind of context.

The architectural assumption behind the problem

Most production RAG systems make the same foundational assumption: all documents can live in a single, ever-growing vector index.

This simplifies ingestion and retrieval logic. It also hides the risk.

As the corpus grows, retrieval noise increases faster than signal. Adding more data does not restore accuracy. Expanding context windows does not fix relevance. Larger models improve fluency, not retrieval quality.

The Stanford research shows that scale alone exposes the limits of this architecture.

How to fix RAG systems at scale

The solution is not complex, but it requires architectural discipline.

Instead of one massive vector space, document collections need to be split into multiple bounded groups. Each group should be capped at around ten thousand documents. At this size, semantic distance remains meaningful and clustering behavior stays intact.

These groups should reflect how the underlying domain actually works. Jurisdictions, business units, time ranges, product lines, or content types should not compete with each other during retrieval. Separation reduces noise before search even begins.
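A minimal sketch of metadata-driven segmentation with a hard size cap might look like the following. The `Document` class, the metadata keys (`jurisdiction`, `year`), and the `segment` helper are hypothetical names chosen for illustration; the 10,000 cap comes from the guidance above. One design choice worth noting: when a group exceeds the cap, the sketch refuses to build it rather than splitting it into arbitrary shards, because searching every shard of an oversized group and merging the results returns the same ranking as one large index. The fix is a finer metadata key.

```python
from collections import defaultdict
from dataclasses import dataclass, field

MAX_BUCKET_SIZE = 10_000  # cap from the guidance above; an assumption to tune per corpus


@dataclass
class Document:
    doc_id: str
    text: str
    metadata: dict[str, str] = field(default_factory=dict)


def bucket_key(doc: Document, keys: tuple[str, ...]) -> tuple[str, ...]:
    """Map a document to its group via metadata, e.g. ("CA", "2023")."""
    return tuple(doc.metadata.get(k, "unknown") for k in keys)


def segment(docs: list[Document], keys: tuple[str, ...],
            max_size: int = MAX_BUCKET_SIZE) -> dict[tuple[str, ...], list[Document]]:
    """Group documents by metadata and enforce a hard size cap per group."""
    groups: dict[tuple[str, ...], list[Document]] = defaultdict(list)
    for doc in docs:
        groups[bucket_key(doc, keys)].append(doc)

    oversized = {key: len(members) for key, members in groups.items() if len(members) > max_size}
    if oversized:
        # Refuse to build an unbounded index; the caller should add a finer
        # key, e.g. ("jurisdiction", "year") instead of ("jurisdiction",).
        raise ValueError(f"groups over {max_size} documents: {oversized}")
    return dict(groups)


# Hypothetical documents tagged with jurisdiction and year metadata.
docs = [
    Document("a", "Tenant notice rules", {"jurisdiction": "CA", "year": "2023"}),
    Document("b", "Tenant notice rules", {"jurisdiction": "NY", "year": "2023"}),
    Document("c", "Older notice rules", {"jurisdiction": "CA", "year": "2015"}),
    Document("d", "Eviction timelines", {"jurisdiction": "CA", "year": "2023"}),
]
buckets = segment(docs, keys=("jurisdiction", "year"))
print({key: len(members) for key, members in buckets.items()})
# → {('CA', '2023'): 2, ('NY', '2023'): 1, ('CA', '2015'): 1}
```

Each resulting group would then get its own vector index, so no single index grows past the cap.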

This design choice restores retrieval precision without relying on heavier models or longer prompts.

Why routing matters as much as segmentation

Segmentation alone is not enough. A routing layer is required to decide which document groups are relevant for a given query.

Before retrieval occurs, the system should infer basic constraints such as domain, time relevance, or category. Only the appropriate groups should be searched.
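Routing can start as simple rules that run before any vector search. The sketch below is one assumed approach, not a prescribed design: `infer_constraints` pulls a jurisdiction and a year out of the query using a hypothetical alias table and a regular expression, and `route` keeps only the buckets whose metadata is compatible. Buckets are represented as `(jurisdiction, year)` tuples for brevity.

```python
import re

# Hypothetical alias table: phrases in a query that imply a jurisdiction.
JURISDICTION_ALIASES = {
    "california": "CA",
    "new york": "NY",
    "texas": "TX",
}
# Four-digit years from 1900 to 2099, matched as whole words.
YEAR_PATTERN = re.compile(r"\b(?:19|20)\d{2}\b")


def infer_constraints(query: str) -> dict[str, str]:
    """Cheap, rule-based constraint extraction that runs before retrieval."""
    lowered = query.lower()
    constraints: dict[str, str] = {}
    for alias, code in JURISDICTION_ALIASES.items():
        if alias in lowered:
            constraints["jurisdiction"] = code
            break
    year = YEAR_PATTERN.search(query)
    if year:
        constraints["year"] = year.group(0)
    return constraints


def route(query: str, bucket_keys: list[tuple[str, str]],
          key_names: tuple[str, ...] = ("jurisdiction", "year")) -> list[tuple[str, str]]:
    """Keep only buckets compatible with the query. A dimension the query
    says nothing about is left unconstrained."""
    constraints = infer_constraints(query)
    selected = []
    for key in bucket_keys:
        labels = dict(zip(key_names, key))
        if all(labels.get(name) == value for name, value in constraints.items()):
            selected.append(key)
    return selected


buckets = [("CA", "2023"), ("CA", "2015"), ("NY", "2023"), ("TX", "2021")]
print(route("What notice period applies in California?", buckets))
# → [('CA', '2023'), ('CA', '2015')]
print(route("New York rules as of 2023", buckets))
# → [('NY', '2023')]
```

A query that matches no rules passes through unconstrained and reaches every bucket, which brings back the crowded search space. A production router would likely add a fallback, such as a default time range, a learned classifier, or a clarifying question; those extensions are assumptions beyond this sketch.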

Routing narrows the search space and protects retrieval quality. It ensures that the language model receives focused, coherent context rather than a diluted mix of loosely related material.

In practice, even simple routing logic can dramatically improve system accuracy when combined with bounded document sets.

What teams should focus on when building RAG systems

Teams building large-scale RAG workflows should prioritize retrieval quality over generation polish. This starts with strong metadata. Documents need clear attributes that support segmentation and routing. Document groups (buckets) must enforce size limits rather than growing indefinitely. Retrieval accuracy should be measured directly, not inferred from how fluent answers sound.
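Measuring retrieval directly means scoring the retrieved document IDs against a labeled set of queries before any generation happens. The sketch below computes recall@k and mean reciprocal rank (MRR), two standard information-retrieval metrics. The `search` callable and the labeled examples are placeholders you would replace with your own retriever and ground truth.

```python
from typing import Callable, Sequence


def recall_at_k(retrieved: Sequence[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)


def reciprocal_rank(retrieved: Sequence[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(search: Callable[[str], list[str]],
             labeled: dict[str, set[str]], k: int = 5) -> dict[str, float]:
    """Average recall@k and MRR of a retriever over labeled queries."""
    recalls, ranks = [], []
    for query, relevant in labeled.items():
        retrieved = search(query)
        recalls.append(recall_at_k(retrieved, relevant, k))
        ranks.append(reciprocal_rank(retrieved, relevant))
    n = len(labeled)
    return {f"recall@{k}": sum(recalls) / n, "mrr": sum(ranks) / n}


# Placeholder retriever output and ground-truth labels for illustration.
fake_results = {
    "notice period in CA": ["doc-7", "doc-2", "doc-9"],
    "NY eviction timeline": ["doc-4", "doc-1", "doc-3"],
}
labels = {
    "notice period in CA": {"doc-2"},
    "NY eviction timeline": {"doc-3", "doc-8"},
}
print(evaluate(lambda q: fake_results[q], labels, k=3))
```

Tracking these numbers per bucket as the corpus grows would show retrieval degradation directly, instead of waiting for users to flag fluent but wrong answers.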

When retrieval is stable, generation improves naturally. When retrieval fails, no amount of generation tuning can compensate.

The takeaway

The Stanford findings clarify what determines whether RAG systems hold up in production.

Retrieval quality depends on respecting the mathematical behavior of vector search and designing architectures that operate within those constraints. Bounded corpora, clear separation of domains, and routing before retrieval preserve semantic structure as systems scale.

RAG systems that remain reliable treat retrieval as a first-class engineering problem. They accept limits early and design around them. When retrieval stays precise, generation stays grounded.

This principle shapes how GoML builds AI systems from the start. We start with constrained retrieval, strong metadata, and routing aligned with real-world structure. The result is accuracy that scales with usage rather than errors that scale with data. That is how RAG works at scale.
