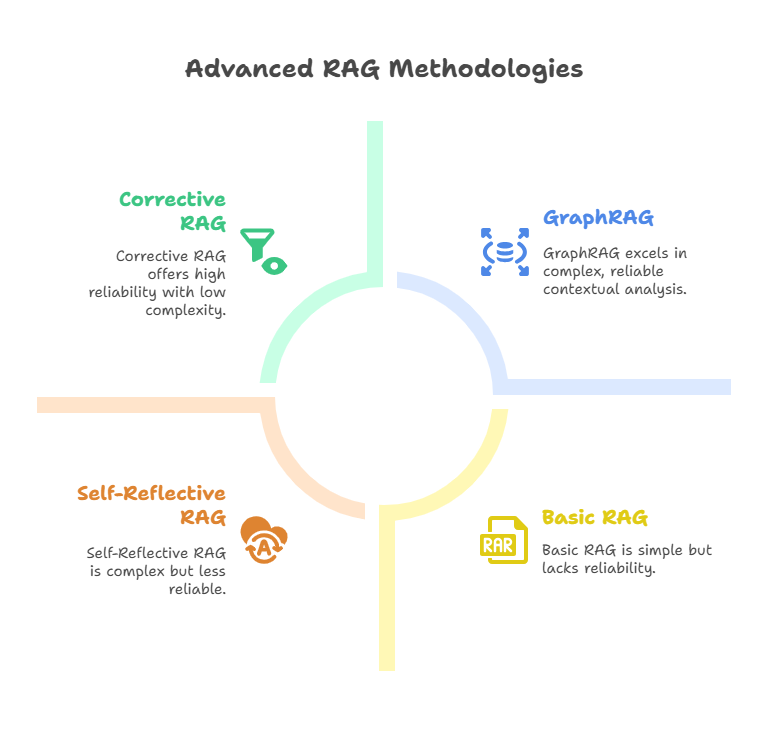

Enterprises are discovering that standalone LLMs often hallucinate or return outdated information, which leads to poor decisions and compliance risk. Retrieval-Augmented Generation (RAG) addresses this by retrieving relevant documents from current, trusted enterprise data sources at query time and grounding the model's answer in them. Advanced RAG workflows such as Self-RAG, Corrective RAG (CRAG), and GraphRAG further reduce hallucinations, improve precision, and support multi-step reasoning. Using tools such as Pinecone, OpenAI embeddings, and LangChain, enterprises are building scalable RAG architectures. Reported results include a 78% increase in customer satisfaction, a 65% reduction in compliance risk, and 92% productivity gains.
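The retrieve-then-ground step described above can be illustrated with a minimal, dependency-free sketch. It is an assumption-laden toy: a bag-of-words cosine similarity stands in for an embedding model, and a Python list stands in for a vector database. It does not use the Pinecone, OpenAI, or LangChain APIs. The function names `retrieve` and `build_grounded_prompt` and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    # (Stand-in for embedding similarity; a real system would use dense vectors.)
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Hypothetical retriever: rank every document by similarity to the query
    # and return the top k (a vector database would do this with an index).
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Hypothetical prompt builder: place the retrieved passages in the prompt
    # and instruct the model to answer only from them.
    context = retrieve(query, docs, k)
    sources = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    corpus = [
        "The refund window for enterprise plans is 30 days.",
        "Our headquarters moved to Berlin in 2023.",
        "Enterprise plans include 24/7 support.",
    ]
    print(build_grounded_prompt("What is the refund window for enterprise plans?", corpus))
```

The resulting prompt is what would be sent to the LLM. Grounding comes from restricting the answer to the retrieved sources rather than to the model's training data. Variants such as Self-RAG and CRAG add steps around this core loop, such as judging whether the retrieved passages are relevant before generating.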

As AI advances, RAG is emerging as a critical foundation for enterprise-grade intelligence, enabling trustworthy, real-time decision support across finance, law, healthcare, and manufacturing.
