Lyzr.ai, an Antler-backed enterprise generative AI platform, built NeoAnalyst, an AI data analyst platform that lets business leaders query data in natural language. Initially powered by GPT-4, the platform delivered strong analytics capabilities, but enterprise customers demanded an open, self-hosted AI data analyst solution that met GDPR and SOC2 requirements.

The problem: compliance gaps, rising costs, and limited control

While NeoAnalyst’s GPT-4 backend delivered strong performance, enterprise adoption hit a roadblock. Customers increasingly demanded an open, self-hosted AI data analyst solution to gain greater control over their deployments and reduce dependency on proprietary APIs. Compliance requirements such as GDPR for data privacy and SOC2 for security, confidentiality, and operational integrity further constrained the platform’s scalability.

Additionally, the GPT-4 API’s high usage costs placed pressure on margins, and its closed architecture limited fine-tuning flexibility, a critical need for orchestrating multiple AI agents in NeoAnalyst’s advanced analytics workflows. As document volumes, query complexity, and compliance demands grew, the existing architecture became a bottleneck, slowing time-to-market and eroding enterprise confidence.

The solution: AWS-native migration to fine-tuned LLaMA2

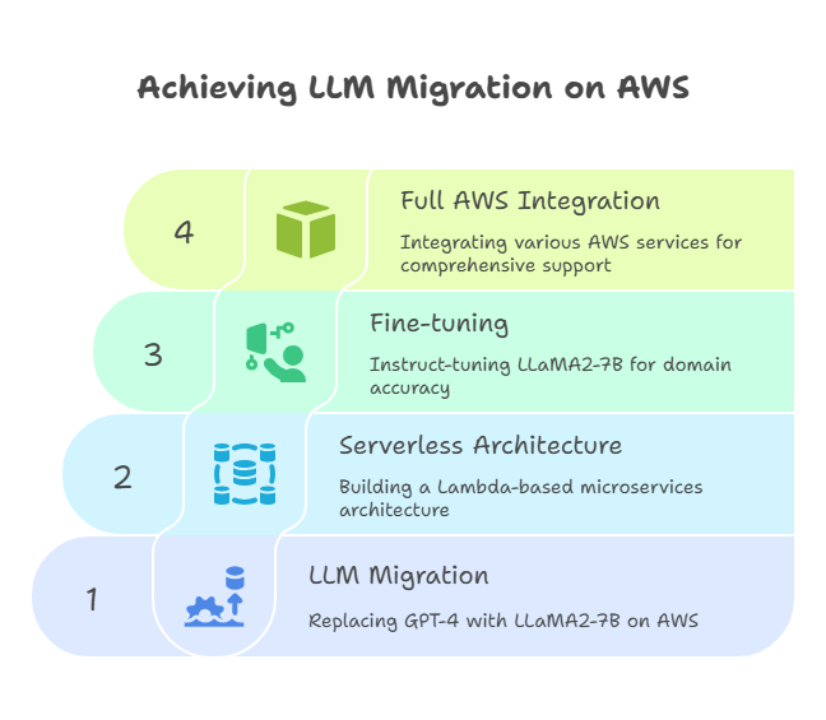

GoML delivered an end-to-end LLM migration and optimization pipeline leveraging AWS services to ensure scalability, compliance, and cost efficiency for the AI data analyst platform.

LLM migration to LLaMA2 on AWS

- Replaced GPT-4 with LLaMA2-7B, hosted natively on AWS.

- Built a serverless, Lambda-based microservices architecture for high availability and cost efficiency of the AI data analyst application.

Fine-tuning for domain accuracy

- Instruct-tuned LLaMA2-7B to match or exceed GPT-4 output quality.

- Optimized for enterprise AI data analyst workflows and natural language querying of analytics data.
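As an illustration of what instruction-tuning data for natural language querying might look like, here is a minimal sketch. The case study does not describe the actual training format, so the prompt template, the text-to-SQL framing, and the names `to_training_record` and `to_jsonl` are all assumptions made for this example.

```python
import json


def to_training_record(question, table_schema, answer_sql):
    """Build one prompt/completion pair (hypothetical format, not from the case study)."""
    prompt = (
        "You are a data analyst. Given the table schema, write a SQL query "
        "that answers the question.\n\n"
        f"Schema:\n{table_schema}\n\nQuestion: {question}\n\nSQL:"
    )
    # A leading space separates the completion from the "SQL:" cue in the prompt.
    return {"prompt": prompt, "completion": " " + answer_sql.strip()}


def to_jsonl(records):
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


if __name__ == "__main__":
    record = to_training_record(
        question="What is total revenue by region?",
        table_schema="sales(region TEXT, revenue REAL)",
        answer_sql="SELECT region, SUM(revenue) FROM sales GROUP BY region;",
    )
    print(to_jsonl([record]))
```

A prompt/completion layout like this is one common way to prepare instruct-tuning data; a real dataset would need many such pairs drawn from the target analytics domain.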

Full AWS integration

- UI and Routing: Amazon Route 53 for DNS and high reliability of AI data analyst services.

- API and Compute: Amazon API Gateway + Elastic Load Balancing + AWS Lambda for routing and execution.

- Storage: Amazon S3, RDS, DynamoDB, and Redshift for storing AI data analyst datasets and outputs.

- Search and Analytics: Amazon OpenSearch Service for real-time search in AI data analyst results.

- ML Ops: Amazon SageMaker for model management; AWS Glue for data prep.

- Security: AWS IAM, WAF, and CloudWatch for monitoring and protection.
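To make the request path concrete, the sketch below shows how an AWS Lambda function behind API Gateway could forward a natural language question to a model hosted on a SageMaker endpoint. This is not GoML's implementation: the endpoint name `neoanalyst-llama2-7b`, the `question` field, and the `inputs`/`parameters` payload shape are assumptions. Only the `invoke_endpoint` call on the `sagemaker-runtime` client is standard boto3.

```python
import json

# Hypothetical endpoint name; the case study does not name the real one.
ENDPOINT_NAME = "neoanalyst-llama2-7b"


def build_payload(question, max_new_tokens=256):
    """Assumed request shape; the actual schema depends on the serving container."""
    return {"inputs": question, "parameters": {"max_new_tokens": max_new_tokens}}


def handler(event, context, runtime_client=None):
    """Lambda entry point for an API Gateway proxy event.

    runtime_client is injectable so the function can be exercised without AWS.
    """
    if runtime_client is None:
        import boto3  # imported lazily so local tests do not need boto3

        runtime_client = boto3.client("sagemaker-runtime")

    body = json.loads(event.get("body") or "{}")
    question = str(body.get("question", "")).strip()
    if not question:
        return {"statusCode": 400, "body": json.dumps({"error": "missing 'question'"})}

    response = runtime_client.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="application/json",
        Body=json.dumps(build_payload(question)),
    )
    # The response body is a stream; read and decode the model output.
    result = json.loads(response["Body"].read())
    return {"statusCode": 200, "body": json.dumps({"answer": result})}
```

Keeping the handler stateless and passing the client in as a parameter suits a serverless design: each invocation is independent, and the model call can be replaced with a stub in tests.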

The impact: cost savings, uptime boost, and compliance-ready AI analytics

Lyzr.ai achieved measurable improvements:

- 30% reduction in operational costs for the AI data analyst platform after migration.

- 99% uptime (up from 80%) with the AWS Lambda-based architecture.

- 8-week complete migration timeline, ensuring minimal disruption to AI data analyst services.

- Full GDPR and SOC2 compliance for enterprise AI data analyst deployments.
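To put the uptime and cost figures in concrete terms, the short calculation below converts an uptime percentage into hours of downtime and applies a percentage cost reduction. The 30-day month and the baseline cost of 100 units are assumptions chosen for illustration; the case study does not report absolute costs.

```python
HOURS_PER_MONTH = 30 * 24  # assumes a 30-day month


def downtime_hours(uptime_pct, period_hours=HOURS_PER_MONTH):
    """Hours of downtime implied by an uptime percentage over a period."""
    return (100 - uptime_pct) / 100 * period_hours


def cost_after_reduction(baseline, reduction_pct):
    """Cost remaining after a percentage reduction."""
    return baseline * (100 - reduction_pct) / 100


if __name__ == "__main__":
    for pct in (80, 99):
        print(f"{pct}% uptime: {downtime_hours(pct):.1f} hours of downtime per month")
    # Baseline of 100 is a hypothetical unit cost, not a figure from the case study.
    print(f"Cost after 30% reduction: {cost_after_reduction(100, 30):.0f}")
```

Under these assumptions, moving from 80% to 99% uptime cuts monthly downtime from about 144 hours to about 7.2 hours.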

"With GoML, we migrated our AI data analyst from GPT-4 to LLaMA2 in just 8 weeks, reduced costs by 30%, and met enterprise compliance demands." — Jithin George, CTO, Lyzr.ai

Lessons for other organizations

Common pitfalls to avoid

- Treating AI data analyst migration as a simple API swap instead of a full architecture redesign.

- Overlooking fine-tuning requirements for domain-specific accuracy in AI data analyst tools.

- Neglecting compliance frameworks when planning how the AI data analyst is hosted.

Advice for teams facing similar challenges

- Start with an AWS-native architecture for AI data analyst scalability and control.

- Fine-tune open-source LLMs for AI data analyst accuracy and relevance.

- Integrate monitoring and security layers from day one.

Want to reduce AI operating costs by 30% while boosting compliance and uptime for your AI data analyst platform?

Let GoML help you migrate your solution to a more effective LLM.
