TL;DR: MIT says “95% of GenAI pilots failed in 2025.” Their solution? “Let’s build an internet of AI agents.” MIT NANDA to the rescue.

Some of you may recall McKinsey’s “$1 Trillion AI Productivity Gap” report from 2020, which claimed that most AI investments fail to deliver value. Its reasoning was fairly generic: lack of strategy, governance, and prioritization, and, apparently, people not coming to the office on time (oops, that one’s on me). Right after that report, McKinsey began marketing its “QuantumBlack AI Transformation” offering, which promised to solve all of those challenges, magically! Why mention this? Let’s put a pin in it for now.

Back in August, MIT published a very insightful report (yes, sarcasm intended) stating that 95% of Gen AI pilots launched in H1 2025 failed to deliver measurable business outcomes. They attributed these failures primarily to organizational issues rather than technology (well, at least they got that part right). SHOCKING!! After three years of industry-wide obsession with Gen AI, MIT now says it has all been a waste, and everyone jumped on the bandwagon fast.

Harvard Business Review supported MIT’s findings, calling OpenAI’s GPT-5 launch underwhelming and labeling it a failed LLM. Gartner went even further, declaring that Gen AI is entering the “trough of disillusionment.” The Economic Times also added fuel to the fire in early September, claiming that the AI gold rush might be inflating a dangerous bubble.

The facts behind the headlines

Let’s unpack how MIT arrived at this bold claim. They surveyed about 400 large organizations, mostly Fortune 1000 companies in North America and Western Europe, the so-called technology hubs of the world. Mid-market firms, SMBs, and startups, the actual early adopters of new tech, were conveniently left out of the study.

The window MIT gave Gen AI pilots to show measurable P&L impact was less than six months. (I know people who’ve waited that long just for an offer letter from these companies!) In MIT’s defense, though, LLMs are supposed to be smarter than humans, so maybe they’re expected to generate hundreds of millions in value within a few months.

MIT’s definition of “measurable business value” was also limited to three factors: P&L impact, cost savings, and revenue uplift. Operational improvements, user adoption, and process-time reductions, the leading indicators of transformation, weren’t considered. Anyone who understands business knows these leading indicators are what later drive cost savings and new revenue.

At GoML, we’ve worked on more than 100 Gen AI projects over the last 2.5 years. Our key observation: customers run pilots to experiment and test business hypotheses, not to chase immediate profit. So labeling pilots as “failures” just because they didn’t generate measurable ROI misses the point; many succeeded at validating business feasibility or technical viability.

From our delivery experience across 100+ pilot engagements, we’ve seen three main types of pilots:

- Exploratory

- Operational

- Commercial

Each is driven by different goals. By treating all pilots the same and declaring them a collective failure, MIT raises serious questions about its storied reputation.

The study’s timeframe (mid-2024 to early 2025) was also when most businesses were still learning how to use Gen AI and experimenting with use cases. Calling the technology a failure based on that period is like saying “the tea tastes bad” when you never let the water boil.

The most interesting part, though, is that the failure data came solely from project sponsors, not from the technical teams or leaders involved in implementation. It’s almost as if MIT wanted a doom headline.

Whither the learning gap?

According to the report, most failures were due to a “Learning Gap.” MIT claimed that Gen AI systems can’t retain feedback, maintain context between sessions, or adapt to business-specific workflows (not sure if they were testing 2023 LLMs, but let’s roll with it).

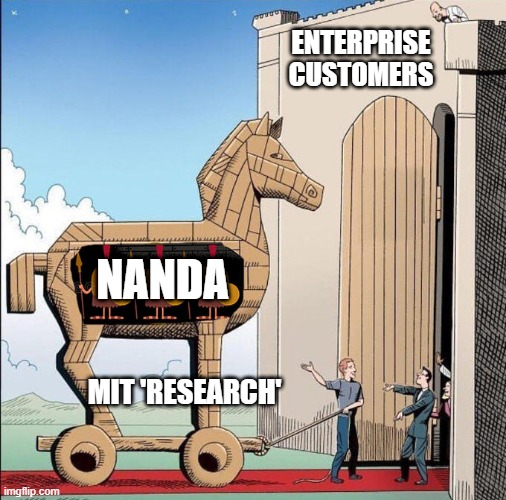

Their proposed solution? NANDA, an infrastructure of rules and protocols that lets AI agents retain feedback, learn, maintain context, and coordinate across systems. NANDA, they say, will fuel the “Agentic Web,” where AI agents collaborate, share data, and improve over time.

MIT positioned NANDA as the answer to the Gen AI divide, which, conveniently, was the problem they framed the report around.

This circles back to my earlier point about McKinsey. Paint a grim picture, build in some survey bias, and, ta-da, unveil your branded solution. MIT’s report does the same: highlight the crisis, then push NANDA as the fix. Yes, protocol-level communication between AI agents is critical, but most real-world pilot failures stem from simpler challenges like clinician buy-in, workflow disruption, data quality, and change management. Infrastructure alone won’t fix those.

NANDA looks more like a research infrastructure project (something MIT does exceptionally well) that is still far from solving production-scale deployment challenges: no proof of adoption, no case studies, no measurable ROI.

Now, let’s place Gen AI realistically within the Technology Adoption Lifecycle (TAL). I like Everett Rogers’ model, later refined by Geoffrey Moore in “Crossing the Chasm,” which maps innovation adoption across five stages:

Innovators → Early Adopters ------Chasm------> Early Majority → Late Majority → Laggards

Most technologies trip at the chasm, failing to prove ROI, alignment, or scalability. Having led more than 100 pilot implementations across startups, mid-tier firms, and enterprises in healthcare and fintech, I believe Gen AI is currently crossing that chasm. We are, in fact, inexorably edging toward the early majority phase.

Gen AI is here to stay

2023–2024: The experimentation phase. Limited POCs, feasibility studies, and integration testing. New models were popping up everywhere, some useful, some pure hype.

2024–2025: The adoption phase. Organizations began aligning Gen AI with real workflows, building compliance into design, and moving toward scalable deployments. Models are consolidating into production-ready platforms like Amazon Bedrock, Google Vertex AI, and Azure AI Studio.

We’re also seeing strong early indicators: budgets shifting from R&D to operations, new Gen AI CoEs forming, and global investments projected to exceed $200 billion by the end of 2025 (Gartner). The real ROI may not yet be fully visible, but operational efficiency, automation, and workflow acceleration are already showing value.

And let’s not forget: our own success rate in taking clients’ Gen AI POCs to production is over 70%. It’s far too early to dismiss Gen AI or call it hype.

For organizations aiming to use Gen AI strategically, the next step is clear: move beyond isolated pilots and focus on end-to-end workflow automation. Build compliance in from the start instead of bolting it on later. Involve business stakeholders from day one, not just during testing.

Gen AI has moved past the innovation hype and is now navigating the chasm. Enterprises are moving from curiosity-driven pilots to production-grade adoption. The winners will be those who focus on operational integration, not experimentation.
