Machine Learning Operations (MLOps) has evolved from a niche practice into a critical business imperative. As organizations increasingly rely on AI-powered applications for competitive advantage, the need for robust, scalable ML operations has never been more pressing. AI applications across industries, from autonomous vehicles to predictive healthcare and personalized education, are moving out of research environments and into systems people rely on every day, which makes MLOps essential.

The stakes are higher than ever. Companies that implement MLOps best practices have reported results such as a 15-20% optimization of promotional spend budgets, a 95% reduction in production downtime, and significantly improved customer retention through targeted campaigns. Yet many organizations still struggle with model deployment challenges, manual monitoring processes, and governance complexities.

This guide presents 10 essential MLOps best practices that can help you move your machine learning operations from chaotic experimentation to streamlined production.

Why MLOps excellence matters more than ever in 2025

The MLOps landscape in 2025 emphasizes implementing monitoring systems for bias, drift, and fairness, while automating compliance reporting. Modern ML systems face unprecedented complexity, with models requiring constant updates to remain relevant and accurate in dynamic business environments.

The cost of poor MLOps practices is significant:

- Deployment bottlenecks: Models trapped in development environments, never reaching production scale

- Manual monitoring overhead: Resource-intensive health checks that drain data science productivity

- Lifecycle management chaos: Inability to update models efficiently, leading to performance degradation

- Governance nightmares: Time-consuming audit processes and compliance challenges

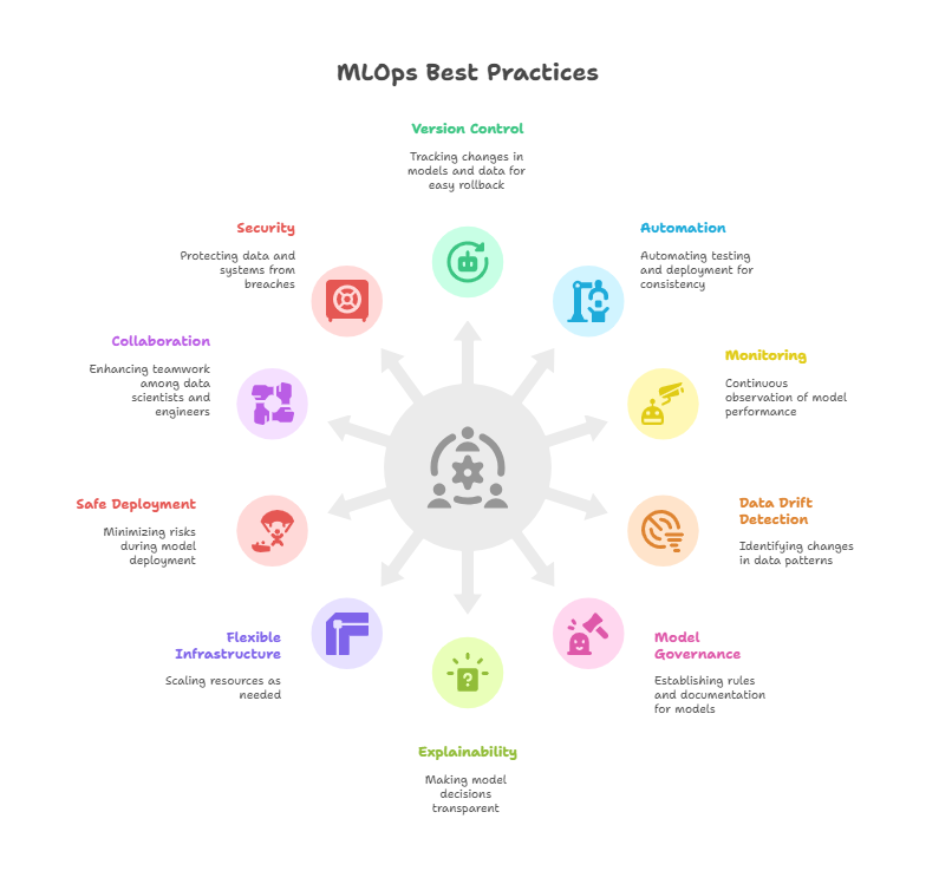

The 10 essential MLOps best practices in 2025

1. Keep track of everything with version control

Think of version control like keeping a detailed diary of your ML project. Just like you save different versions of a document, you need to save different versions of your ML models, data, and code.

What to track:

- Your ML models and their settings

- Training data and how you process it

- Code changes and configurations

- Experiment results and notes

Why it matters: When something goes wrong (and it will), you can quickly go back to a working version. It's like having a time machine for your ML projects.
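One way to make "track everything" concrete is to give each training run a fingerprint built from its data, settings, and code version. The sketch below is a minimal illustration using only Python's standard library; the `fingerprint` function, the sample CSV bytes, and the `abc123` commit ID are made up for this example, and a real project would more likely lean on a dedicated tool.

```python
import hashlib
import json


def fingerprint(data_bytes: bytes, config: dict, code_version: str) -> str:
    """Return a short, repeatable ID for the inputs of one training run."""
    record = {
        "data": hashlib.sha256(data_bytes).hexdigest(),
        "config": config,  # assumed to be JSON-serializable
        "code": code_version,
    }
    # sort_keys makes the ID independent of the order the settings were written in
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


training_data = b"id,label\n1,0\n2,1\n"  # hypothetical dataset contents
run_a = fingerprint(training_data, {"lr": 0.01, "epochs": 5}, "abc123")
run_b = fingerprint(training_data, {"epochs": 5, "lr": 0.01}, "abc123")
run_c = fingerprint(training_data, {"lr": 0.02, "epochs": 5}, "abc123")

print(run_a == run_b)  # same inputs, same ID
print(run_a == run_c)  # a changed setting gives a different ID
```

Storing this ID next to each saved model makes it easier to tell which data, settings, and code produced it when you need to go back.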

2. Automate your testing and deployment

Imagine having a robot assistant that tests your ML models and deploys them automatically when they're ready. That's what CI/CD (Continuous Integration/Continuous Deployment) does for your ML workflow.

What to automate:

- Check if your data looks correct

- Test if your model works as expected

- Verify your model isn't biased against certain groups

- Deploy your model when all tests pass

Why it matters: Manual testing takes forever and people make mistakes. Automation ensures consistency and saves time.
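As a rough sketch of what an automated gate might look like, the code below checks the data first, then the model's accuracy, and only reports "ok" when both pass. The function names, the `amount` and `is_fraud` fields, the toy rule-based model, and the thresholds are all assumptions for illustration. A bias check could be added as one more step in the same style.

```python
def validate_rows(rows, required_fields):
    """Return a list of human-readable problems found in the data."""
    problems = []
    for i, row in enumerate(rows):
        for name in required_fields:
            if row.get(name) is None:
                problems.append(f"row {i}: missing {name}")
    return problems


def accuracy(predict, rows, label_field):
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(1 for row in rows if predict(row) == row[label_field])
    return correct / len(rows)


def release_gate(predict, rows, feature_fields, label_field, min_accuracy):
    """Return (ok, reasons). Deploy only when ok is True."""
    if not rows:
        return False, ["no evaluation rows"]
    problems = validate_rows(rows, feature_fields + [label_field])
    if problems:
        return False, problems
    acc = accuracy(predict, rows, label_field)
    if acc < min_accuracy:
        return False, [f"accuracy {acc:.2f} is below the {min_accuracy:.2f} threshold"]
    return True, []


# Hypothetical holdout set and a toy rule standing in for a trained model
holdout = [
    {"amount": 20, "is_fraud": False},
    {"amount": 500, "is_fraud": True},
    {"amount": 150, "is_fraud": False},
    {"amount": 900, "is_fraud": True},
]


def toy_model(row):
    return row["amount"] > 100


print(release_gate(toy_model, holdout, ["amount"], "is_fraud", 0.70))
print(release_gate(toy_model, holdout, ["amount"], "is_fraud", 0.80))
```

In a CI/CD pipeline, a script like this would typically exit with a non-zero status when the gate fails, so the deployment step never runs.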

3. Watch your models

Once your model is live, you need to monitor it constantly. Think of it like being a security guard watching the cameras: you need to spot problems before they become disasters.

What to monitor:

- How accurate your model is over time

- How fast it responds to requests

- Whether the input data looks different than expected

- How much money it's costing to run

Why it matters: Models can break silently. Your accuracy might drop, but no error messages appear. Only monitoring will catch these issues.
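To show how a silent accuracy drop could be caught, here is a small sketch that tracks accuracy over the most recent predictions and flags when it falls below a threshold. The `RollingAccuracy` class, the window size, and the threshold are illustrative assumptions. It also assumes you eventually learn the true outcome for each prediction, which is not always the case in practice.

```python
from collections import deque


class RollingAccuracy:
    """Track accuracy over the most recent `window` predictions."""

    def __init__(self, window: int, alert_below: float):
        self.outcomes = deque(maxlen=window)  # old results fall off automatically
        self.alert_below = alert_below

    def record(self, prediction, actual) -> None:
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self) -> bool:
        # Wait until the window is full so a couple of early misses don't page anyone
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.alert_below


monitor = RollingAccuracy(window=4, alert_below=0.75)
for prediction, actual in [(1, 1), (0, 0), (1, 0), (1, 0)]:
    monitor.record(prediction, actual)

print(monitor.accuracy())      # 0.5
print(monitor.should_alert())  # True
```

The same pattern works for response time or cost: keep a recent window, compare it against a threshold, and alert when it crosses the line.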

4. Detect when your data changes (data drift)

Data drift is like your favorite restaurant changing its recipe: everything looks the same, but the taste is different. In ML, this happens when the input data your model sees in production changes over time.

What to watch for:

- New types of data you've never seen before

- Changes in data patterns (like customers suddenly buying different products)

- Missing data fields that were there before

- Statistical changes in your data distribution

Why it matters: When data changes, your model's performance can drop. You need to catch this early and retrain your model.
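For the "statistical changes" item, one commonly used measure is the Population Stability Index (PSI), which compares how values are spread across bins in a baseline sample versus a recent sample. The sketch below is a simplified, standard-library version for a single numeric feature; the `psi` function, the bin count, and the sample data are assumptions for illustration, and both samples are assumed to be non-empty.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp so values outside the baseline range land in the edge bins
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps avoids log(0) when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = list(range(100))            # hypothetical training-time values
shifted = [v + 30 for v in baseline]   # the same values, shifted upward

print(psi(baseline, baseline))  # 0.0, nothing changed
print(psi(baseline, shifted))   # a large value, the distribution moved
```

A frequently cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but the right thresholds depend on your data.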

5. Create rules and documentation (model governance)

Think of governance like having a rulebook for your ML models. Just like a company has policies for employees, you need policies for your models.

What to document:

- What your model is supposed to do

- What data it uses and where it comes from

- Who approved it for production use

- What limitations and risks it has

Why it matters: For legal compliance, debugging issues, and helping new team members understand your models.
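The four items above map naturally onto a small, structured record, often called a model card. The following is a minimal sketch of that idea; the `ModelCard` class, its field names, and the `churn-v3` example are hypothetical, not a standard schema.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """A minimal record of what a model is, where it came from, and who signed off."""

    name: str
    purpose: str = ""
    data_sources: list = field(default_factory=list)
    approved_by: str = ""
    limitations: list = field(default_factory=list)

    def missing_fields(self):
        """Names of fields that are still empty."""
        return [key for key, value in asdict(self).items() if not value]

    def ready_for_production(self) -> bool:
        return not self.missing_fields()


card = ModelCard(
    name="churn-v3",  # hypothetical model
    purpose="Predict which customers are likely to cancel next month",
    data_sources=["crm_export"],
)

print(card.missing_fields())        # ['approved_by', 'limitations']
print(card.ready_for_production())  # False
```

A check like `ready_for_production()` can run as part of your deployment pipeline so that undocumented models never reach production.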

6. Make your models explainable

Your model should be able to "explain" why it made a decision. It's like asking, "Why did you recommend this movie to me?" and getting a clear answer.

What to explain:

- Which features most influenced a prediction

- Why certain decisions were made

- How confident the model is about its predictions

- What happens if you change certain inputs

Why it matters: For debugging, building trust with users, and meeting regulatory requirements.
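One simple, model-agnostic way to see which features influence predictions is permutation importance: shuffle one feature's values across rows and measure how much accuracy drops. The sketch below is a bare-bones version of that technique; the toy rule-based model, the `amount` and `hour` features, and the sample rows are invented for illustration.

```python
import random


def permutation_importance(predict, rows, labels, feature, seed=0):
    """Accuracy lost when one feature's values are shuffled across rows."""

    def accuracy(data):
        hits = sum(predict(row) == label for row, label in zip(data, labels))
        return hits / len(labels)

    baseline = accuracy(rows)
    values = [row[feature] for row in rows]
    random.Random(seed).shuffle(values)  # seeded so the result is repeatable
    shuffled = [{**row, feature: value} for row, value in zip(rows, values)]
    return baseline - accuracy(shuffled)


# Hypothetical data: the toy model only ever looks at "amount"
rows = [
    {"amount": a, "hour": h}
    for a, h in [(20, 3), (500, 14), (80, 9), (900, 22), (40, 1), (300, 17)]
]
labels = [row["amount"] > 100 for row in rows]


def toy_model(row):
    return row["amount"] > 100


for feature in ("amount", "hour"):
    print(feature, permutation_importance(toy_model, rows, labels, feature))
```

Because the toy model ignores `hour`, shuffling it costs no accuracy and its importance is 0.0. Shuffling `amount` can only hurt, so its importance is zero or higher. Production systems usually average over several shuffles and often use dedicated explainability libraries instead.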

7. Build flexible infrastructure that grows with you

Your ML infrastructure should be like a rubber band, able to stretch when you need more power and shrink when you don't.

Key components:

- Cloud services that automatically scale up and down

- Containers (like Docker) that package everything neatly

- Load balancers that distribute work evenly

- Backup systems in case something fails

Why it matters: You don't want to pay for unused resources, but you also don't want your system to crash when traffic increases.
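To give a feel for how "stretch and shrink" can work, here is a sketch of a proportional scaling rule: if each server is handling more load than you want, add servers in proportion, and remove them when load falls. This mirrors the general approach used by autoscalers such as the Kubernetes Horizontal Pod Autoscaler, but the `desired_replicas` function, its parameters, and the numbers below are illustrative assumptions, not any platform's actual API.

```python
import math


def desired_replicas(current, observed_load, target_load, min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to how far load is from the target.

    observed_load and target_load are per-replica figures in the same unit,
    for example requests per second or CPU percent.
    """
    wanted = math.ceil(current * observed_load / target_load)
    # Stay inside the allowed range so a spike can't run up an unlimited bill
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(current=4, observed_load=90, target_load=60))   # 6, scale up
print(desired_replicas(current=4, observed_load=15, target_load=60))   # 1, scale down
print(desired_replicas(current=4, observed_load=600, target_load=60))  # 10, capped at the maximum
```

The minimum and maximum bounds are the important design choice here: the floor keeps the service available during quiet periods, and the ceiling keeps costs predictable.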

8. Deploy safely with smart strategies

Don't just replace your old model with a new one overnight. Use smart deployment strategies that minimize risk.

Safe deployment methods:

- Blue-Green: Keep two identical environments, switch all traffic from the old one to the new one at once, and switch back instantly if needed

- Canary: Send just 5% of traffic to the new model first, then gradually increase

- A/B testing: Compare old vs new model performance with real users

Why it matters: If your new model has problems, you can quickly switch back without affecting all your users.
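A canary rollout needs a way to decide which requests go to the new model. One common approach, sketched below, is to hash each user ID into a bucket from 0 to 99 and send the lowest buckets to the new model. The `route` function and the `user-N` IDs are assumptions for illustration.

```python
import hashlib


def route(user_id: str, canary_percent: int) -> str:
    """Return "new" for roughly canary_percent of users, "old" for the rest."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket from 0 to 99
    return "new" if bucket < canary_percent else "old"


users = [f"user-{i}" for i in range(10_000)]  # hypothetical user IDs
share = sum(route(u, 5) == "new" for u in users) / len(users)
print(f"{share:.1%} of users see the new model")

# Raising the percentage only adds users; nobody flips back to the old model
still_new = all(route(u, 25) == "new" for u in users if route(u, 5) == "new")
print(still_new)
```

Hashing instead of picking randomly per request means each user consistently sees the same model, which keeps their experience stable and makes A/B comparisons cleaner. Rolling back is just setting the percentage to 0.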

9. Get everyone working together

MLOps works best when data scientists, engineers, and business people collaborate effectively. It's like a sports team: everyone has different skills but works toward the same goal.

How to collaborate:

- Regular meetings where everyone shares updates

- Shared tools that everyone can access

- Clear definitions of who does what

- Documentation that non-technical people can understand

Why it matters: Many ML projects fail because of communication problems, not technical issues.

10. Keep everything secure and private

Treat your ML systems like a bank vault: multiple layers of security protecting valuable assets.

Security essentials:

- Encrypt all data (like putting it in a locked box)

- Control who can access what

- Monitor for unusual activity

- Regular security updates and patches

- Back up everything important

Why it matters: Data breaches are expensive and damage your reputation. Privacy regulations like GDPR require strong security.
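As a small illustration of "control who can access what" and "monitor for unusual activity", the sketch below maps roles to allowed actions, denies anything not explicitly listed, and logs every attempt. The role names, actions, and user names are invented for this example. Real systems would normally rely on their cloud provider's or platform's access controls rather than hand-rolled code.

```python
# Hypothetical roles and the actions each one may perform
ROLE_PERMISSIONS = {
    "data_scientist": {"read_features", "train_model"},
    "ml_engineer": {"read_features", "train_model", "deploy_model"},
    "analyst": {"read_predictions"},
}

audit_log = []


def check_access(user: str, role: str, action: str) -> bool:
    """Allow an action only if the role explicitly grants it, and log the attempt."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())  # unknown roles get nothing
    audit_log.append({"user": user, "role": role, "action": action, "allowed": allowed})
    return allowed


print(check_access("priya", "ml_engineer", "deploy_model"))  # True
print(check_access("sam", "analyst", "deploy_model"))        # False
print(check_access("alex", "intern", "read_features"))       # False

denied = [entry for entry in audit_log if not entry["allowed"]]
print(len(denied))  # 2
```

The deny-by-default rule matters most: a new role or a typo results in no access rather than accidental access, and the list of denied attempts gives you something to review for unusual activity.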

How to start with MLOps?

Implementing these 10 MLOps best practices will help you create reliable ML and AI systems that deliver real business value. Companies that follow them can see better efficiency, lower costs, happier customers, and faster innovation. A practical way to begin:

- Assess where you are now across these 10 areas

- Pick 2-3 areas to focus on first (we recommend starting with version control and monitoring)

- Build up your capabilities gradually

- Measure your progress and celebrate wins

Remember, MLOps best practices are not a one-time project. They're an ongoing journey of continuous improvement.

How to measure the success of your MLOps best practices?

Track these simple metrics to see if your MLOps best practices are working:

Speed metrics

- How long it takes to deploy a new model (goal: under 1 week)

- How quickly you find problems (goal: within 1 hour)

- How fast you fix issues (goal: within 4 hours)

Quality metrics

- Model accuracy over time (should stay stable or improve)

- Response time for predictions (should be fast and consistent)

- Cost per prediction (should decrease or stay stable)

Business metrics

- Revenue impact from ML improvements

- Customer satisfaction with AI-powered features

- Time saved through automation
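The speed metrics are the easiest to automate, because they are just differences between timestamps compared against a goal. The sketch below uses the goals listed above; the `speed_report` function and the example timestamps are made up for illustration, and it assumes "time to fix" is measured from the moment the problem was detected.

```python
from datetime import datetime, timedelta

# Goals taken from the speed metrics above
GOALS = {
    "time_to_deploy": timedelta(weeks=1),
    "time_to_detect": timedelta(hours=1),
    "time_to_fix": timedelta(hours=4),
}


def speed_report(measured):
    """Return, for each metric, whether the measured duration met its goal."""
    return {name: measured[name] <= goal for name, goal in GOALS.items()}


# Hypothetical timestamps for one model release and one incident
model_ready = datetime(2025, 3, 3, 9, 0)
deployed = datetime(2025, 3, 7, 17, 0)
incident_started = datetime(2025, 3, 10, 14, 0)
incident_detected = datetime(2025, 3, 10, 14, 40)
incident_fixed = datetime(2025, 3, 10, 20, 10)

report = speed_report({
    "time_to_deploy": deployed - model_ready,
    "time_to_detect": incident_detected - incident_started,
    "time_to_fix": incident_fixed - incident_detected,
})
print(report)
```

In this example the deployment and detection goals are met, but the fix took five and a half hours, so `time_to_fix` comes back as `False`.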

What are the emerging best practices trends in MLOps?

Here are the trends that we expect will shape MLOps best practices in 2025 and beyond:

- Easier tools for everyone: New platforms are making MLOps best practices accessible to people without deep technical knowledge.

- Edge AI: More models will run on phones, cars, and IoT devices, requiring new optimization techniques.

- Automatic compliance: Tools that automatically generate reports for legal and regulatory requirements.

- Green AI: Focus on reducing energy consumption and environmental impact of ML systems.

Looking to harness the full potential of AWS's AI and ML ecosystem for your enterprise?

GoML is a leading Gen AI development company with deep expertise in SageMaker, Bedrock AgentCore, Nova Act SDK, and Strands SDK. We help you build sophisticated AI agents faster, deploy them safely across your organization, and scale seamlessly as your needs grow. Reach out to us today.