In enterprise AI, complexity is a tax. Scaling initiatives often expose gaps: tools that don’t integrate, models that are hard to operationalize, and teams stretched thin on expertise. Add shifting regulations and rising security demands, and the risks multiply fast.

Amazon Bedrock cuts through that friction. It gives enterprises a secure, compliant, and scalable foundation for running production-grade AI without overhauling existing systems or reinventing the stack.

The Amazon Bedrock AI advantage

Amazon Bedrock AI is the fully managed AWS service for building and scaling custom generative AI applications with leading foundation models from AI innovators like Anthropic, Meta, and Amazon itself. It combines a serverless, managed experience with robust security and deep integrations with other AWS services. That helps enterprises move faster, safeguard sensitive data, and meet strict compliance demands, all while reducing the operational burden of managing AI infrastructure.

For enterprise leaders, the pain point is real: how do you deliver advanced AI capabilities (such as chatbots, document intelligence, or fraud detection) without running into regulatory headaches or runaway cloud costs? With Bedrock AI, there’s no need to build everything from scratch. Teams can use Bedrock’s built-in safeguards, monitoring, and automation while tapping into best-in-class models and developer tools. This speeds up both experimentation and production deployment.

Bedrock gives teams a straightforward way to use top-tier models, keep data safe, and scale across workloads. The goal is clear: make AI practical for real business outcomes without heavy lifting.

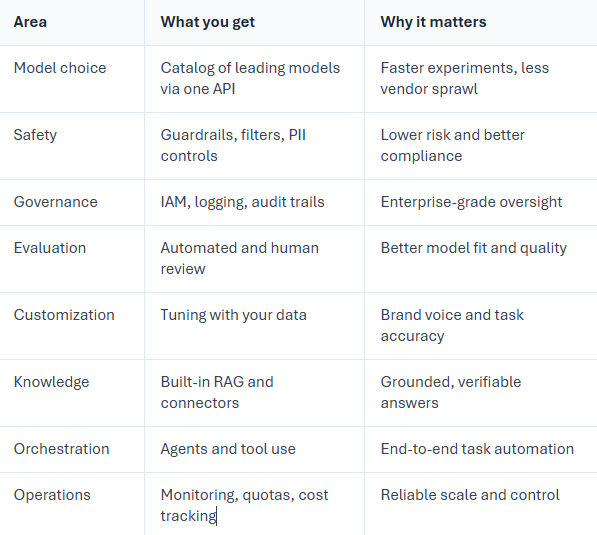

This guide explains how Amazon Bedrock AI helps leaders, architects, and builders ship with confidence. It covers model access, ease of use, safety, governance, monitoring, customization, orchestration, pricing, and more. It also shows where GoML fits in as your partner for fast, secure value creation.

Why choose Amazon Bedrock for enterprise AI?

- Choice with a single interface: Pick from leading text, vision, and code models inside one service. Use one API and console rather than stitching many vendors together.

- Simple abstraction layer: Swap or mix models without rewriting your app. Match the task to the model that performs best.

- Managed scale and security: Run on AWS with private networking, encryption, and tight identity controls. Keep sensitive data under your control.

- Built-in safety and governance: Apply guardrails and content filters. Track usage and access for audits.

- Native AWS integration: Connect to storage, data, security, monitoring, and MLOps tools you already use.
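The "one API, many models" idea can be shown with a minimal Python sketch of the Converse API in the AWS SDK for Python (boto3). It assumes three things: boto3 is installed, AWS credentials are configured, and your account has access to the model you pass in. The helper names `build_converse_request` and `ask` are illustrative and are not part of any SDK. The request shape is the same across Converse-compatible models, so switching models only changes the `model_id` string.

```python
from __future__ import annotations

from typing import Any


def build_converse_request(
    model_id: str,
    user_text: str,
    system_prompt: str | None = None,
    max_tokens: int = 512,
    temperature: float = 0.2,
) -> dict[str, Any]:
    """Build keyword arguments for the bedrock-runtime Converse operation.

    The message format is shared by every Converse-compatible model, so
    switching models only changes `model_id`.
    """
    request: dict[str, Any] = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }
    if system_prompt:
        request["system"] = [{"text": system_prompt}]
    return request


def ask(model_id: str, user_text: str) -> str:
    """Send one prompt and return the first text block of the reply.

    Requires boto3, AWS credentials, and model access in your account.
    """
    import boto3  # imported here so the request builder runs without the AWS SDK

    client = boto3.client("bedrock-runtime")
    response = client.converse(**build_converse_request(model_id, user_text))
    return response["output"]["message"]["content"][0]["text"]
```

For example, `ask("anthropic.claude-3-haiku-20240307-v1:0", "Summarize this memo.")` and `ask("meta.llama3-8b-instruct-v1:0", "Summarize this memo.")` send the same payload to two different models. Some newer models must be called through an inference profile ID rather than the base model ID, so check the model catalog for the exact identifier.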

Bedrock offers broad model access and ease of use

- Catalog of leading models: Browse models for tasks like summarization, Q&A, content generation, code help, image understanding, and more.

- Single API surface: Call different models using a consistent pattern. Reduce glue code and speed up prototyping.

- Fast onboarding: Start in the console or drop in SDK calls. No custom infrastructure needed.

- Access control with IAM: Grant least-privilege access by user, team, or workload. Map permissions to your organization’s structure.

- Private connectivity: Use VPC endpoints so traffic stays on the AWS network. Protect workloads from the public internet.
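One way to express least-privilege access is an IAM policy that allows invocation of approved models only. The sketch below builds such a policy document in Python. The function name is hypothetical, and the model ID in the example is illustrative. Converse calls are authorized by the `bedrock:InvokeModel` action, and streaming calls by `bedrock:InvokeModelWithResponseStream`.

```python
from __future__ import annotations

import json


def bedrock_invoke_policy(region: str, model_ids: list[str]) -> dict:
    """Return an IAM policy document that allows invoking only the listed foundation models."""
    # Foundation-model ARNs have an empty account field.
    resources = [
        f"arn:aws:bedrock:{region}::foundation-model/{model_id}" for model_id in model_ids
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelsOnly",
                "Effect": "Allow",
                # Converse is authorized by InvokeModel; ConverseStream by the streaming action.
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": resources,
            }
        ],
    }


if __name__ == "__main__":
    policy = bedrock_invoke_policy("us-east-1", ["anthropic.claude-3-haiku-20240307-v1:0"])
    print(json.dumps(policy, indent=2))
```

Attach the resulting JSON to the role your application or team assumes. Listing explicit model ARNs instead of a wildcard keeps each workload limited to the models it was approved for.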

Safety, guardrails, and explainability

- Policy-driven guardrails: Set rules that block topics, mask PII, and filter harmful output. Apply consistent controls across models.

- Content moderation: Use built-in filters to reduce unsafe or off-policy responses.

- Prompt and output controls: Standardize prompts, enforce review steps, and log interactions for oversight.

- Audit and lineage: Use AWS CloudTrail and Bedrock model invocation logging to capture who ran which model, with what configuration, and when. Support internal and external audits.

- Transparent operations: Pair traceable logs with clear rules so teams can review decisions in context.
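Policy-driven guardrails can be sketched as a helper that assembles arguments for the `create_guardrail` operation on the boto3 `bedrock` client. The configuration combines one denied topic, content filters, and PII masking. The guardrail name, topics, filter strengths, and PII types are illustrative choices, not recommendations. `with_guardrail`, also a hypothetical helper, attaches the guardrail to a Converse request through its `guardrailConfig` field. The same controls then apply whichever model the request targets.

```python
from __future__ import annotations


def guardrail_definition(name: str, denied_topics: dict[str, str]) -> dict:
    """Keyword arguments for create_guardrail on the boto3 "bedrock" client."""
    return {
        "name": name,
        # Messages shown to the user when a prompt or a response is blocked.
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't share that response.",
        # Topics the application must refuse, e.g. personalized investment advice.
        "topicPolicyConfig": {
            "topicsConfig": [
                {"name": topic, "definition": definition, "type": "DENY"}
                for topic, definition in denied_topics.items()
            ]
        },
        # Harmful-content filters on prompts (input) and responses (output).
        "contentPolicyConfig": {
            "filtersConfig": [
                {"type": "HATE", "inputStrength": "HIGH", "outputStrength": "HIGH"},
                {"type": "VIOLENCE", "inputStrength": "HIGH", "outputStrength": "HIGH"},
                # Prompt-attack detection only applies to input, so output is NONE.
                {"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"},
            ]
        },
        # Mask these PII types instead of blocking the whole message.
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [
                {"type": "EMAIL", "action": "ANONYMIZE"},
                {"type": "PHONE", "action": "ANONYMIZE"},
            ]
        },
    }


def with_guardrail(request: dict, guardrail_id: str, version: str = "DRAFT") -> dict:
    """Return a copy of a Converse request with a guardrail attached."""
    return {
        **request,
        "guardrailConfig": {"guardrailIdentifier": guardrail_id, "guardrailVersion": version},
    }
```

In practice, you would follow these steps:

1. Call `boto3.client("bedrock").create_guardrail(**guardrail_definition(...))`.
2. Read the returned `guardrailId`.
3. Publish a numbered version with `create_guardrail_version` before pointing production traffic at it.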

Evaluation, monitoring, and governance

- Model evaluation: Run automated checks and human review workflows to compare models on your data and criteria. Pick the best model for each use case.

- Quality tracking: Measure accuracy, helpfulness, latency, and cost. Keep a scorecard per use case and iterate.

- Operational monitoring: Use CloudWatch and related AWS tools to monitor throughput, errors, and performance.

- Change management: Version prompts, templates, and agents. Promote changes through dev, test, and prod with approvals.
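A per-use-case scorecard can start as a few lines of Python. The sketch assumes you have already collected one record per test prompt from your own evaluation runs. Each record holds whether the answer was judged correct, the observed latency, and the cost. `EvalRecord`, `scorecard`, and `pick_model` are hypothetical names. The selection rule, which picks the cheapest model above an accuracy bar, is one reasonable choice among many.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import median


@dataclass
class EvalRecord:
    model_id: str
    correct: bool  # judged by an automated check or a human reviewer
    latency_ms: float
    cost_usd: float


def scorecard(records: list[EvalRecord]) -> dict[str, dict[str, float]]:
    """Summarize accuracy, median latency, and average cost per model."""
    by_model: dict[str, list[EvalRecord]] = {}
    for record in records:
        by_model.setdefault(record.model_id, []).append(record)
    return {
        model: {
            "accuracy": sum(r.correct for r in rs) / len(rs),
            "p50_latency_ms": median(r.latency_ms for r in rs),
            "avg_cost_usd": sum(r.cost_usd for r in rs) / len(rs),
        }
        for model, rs in by_model.items()
    }


def pick_model(card: dict[str, dict[str, float]], min_accuracy: float) -> str | None:
    """Return the cheapest model that clears the accuracy bar, or None if none does."""
    eligible = [m for m, stats in card.items() if stats["accuracy"] >= min_accuracy]
    return min(eligible, key=lambda m: card[m]["avg_cost_usd"], default=None)
```

You can recompute the same records after every prompt, template, or model change. That turns the scorecard into a simple regression check before a change is promoted from test to production.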

Customization and fine-tuning

- Lightweight tuning: Improve a model on your data using safe, guided workflows. Tailor tone, vocabulary, and task behavior.

- Parameter-efficient options: Reduce cost and time by tuning only what is needed. Keep the process accessible to most teams.

- Data controls: Keep training and evaluation data private. Manage keys and encryption with AWS services.

- Reusable assets: Store tuned models, prompts, and templates so other teams can reuse what works.
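Preparing fine-tuning data is mostly careful formatting. Amazon Titan Text models, for example, expect JSON Lines records with `prompt` and `completion` fields. Some other models use a conversational `messages` format instead, so check the documentation for the model you are customizing. The sketch below validates and de-duplicates prompt/completion pairs before serializing them. The function name `to_jsonl` is hypothetical.

```python
from __future__ import annotations

import json


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize (prompt, completion) pairs as JSON Lines, one record per line.

    Rejects blank fields and drops exact duplicates so they are not over-weighted.
    """
    seen: set[tuple[str, str]] = set()
    lines: list[str] = []
    for prompt, completion in pairs:
        if not prompt.strip() or not completion.strip():
            raise ValueError(f"blank prompt or completion: {(prompt, completion)!r}")
        if (prompt, completion) in seen:
            continue
        seen.add((prompt, completion))
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines) + "\n"
```

You would then upload the output to an encrypted S3 bucket and reference it from a model customization job's training data configuration.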

Knowledge bases and retrieval

- RAG made simple: Create a knowledge base that indexes your documents and content for retrieval-augmented generation (RAG). The model receives relevant context at query time, which grounds its answers in your own facts.

- Flexible connectors: Ingest content from data sources such as Amazon S3 and store embeddings in a supported vector database. Start with S3 and grow as needed.

- Freshness by design: Keep indexes current with scheduled sync. Reduce the risk of stale answers.

- Grounded responses: Cite sources and improve trust for users and stakeholders.
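A knowledge base query with citations can be sketched with the `retrieve_and_generate` operation on the boto3 `bedrock-agent-runtime` client. You would supply the knowledge base ID and model ARN yourself, and the helper names are hypothetical. `answer_with_sources` extracts the generated text and the cited S3 documents from the response. Those citations are what let an application show users where an answer came from.

```python
from __future__ import annotations


def rag_request(question: str, knowledge_base_id: str, model_arn: str) -> dict:
    """Keyword arguments for retrieve_and_generate on the bedrock-agent-runtime client."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def answer_with_sources(response: dict) -> tuple[str, list[str]]:
    """Return the generated answer and the unique S3 URIs it cited, in first-seen order."""
    sources: list[str] = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            # Non-S3 sources (e.g. web pages) have no s3Location and are skipped here.
            uri = ref.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in sources:
                sources.append(uri)
    return response["output"]["text"], sources
```

A typical call follows two steps:

1. Call `boto3.client("bedrock-agent-runtime").retrieve_and_generate(**rag_request(question, kb_id, model_arn))`.
2. Pass the response to `answer_with_sources` to get the text and source list to display.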

Agents and orchestration

- Agents for Amazon Bedrock: Build agents that can read instructions, use tools, call APIs, and take multi-step actions.

- Tool use and function calling: Define action groups that map to your services. Let the agent plan and execute tasks end to end.

- Workflow patterns: Cover tasks like order status, returns, appointment booking, knowledge lookup, and system updates.

- Policy and safety layers: Apply the same guardrails and identity controls to every step.
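The Converse API's `toolConfig` can illustrate the tool-use pattern behind agents. Note that this sketch is a hand-rolled loop, not Agents for Amazon Bedrock with action groups. Agents run a similar plan, act, and observe cycle for you. The `get_order_status` function is a hypothetical stand-in for one of your services. The client is passed in as a parameter, so you can exercise the loop without AWS.

```python
from __future__ import annotations

from typing import Any, Callable


def get_order_status(order_id: str) -> dict[str, Any]:
    """Hypothetical stand-in for a real order-management API call."""
    return {"order_id": order_id, "status": "shipped"}


# Local handlers the model is allowed to call, keyed by tool name.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {"get_order_status": get_order_status}

# Tool definitions sent to the model in Converse's toolConfig field.
TOOL_CONFIG = {
    "tools": [
        {
            "toolSpec": {
                "name": "get_order_status",
                "description": "Look up the shipping status of an order by its ID.",
                "inputSchema": {
                    "json": {
                        "type": "object",
                        "properties": {"order_id": {"type": "string"}},
                        "required": ["order_id"],
                    }
                },
            }
        }
    ]
}


def run_tool_calls(assistant_message: dict[str, Any]) -> dict[str, Any] | None:
    """Execute every toolUse block in a reply and build the toolResult message."""
    results = []
    for block in assistant_message.get("content", []):
        tool_use = block.get("toolUse")
        if tool_use is None:
            continue
        handler = TOOLS.get(tool_use["name"])
        if handler is None:
            payload, status = {"error": f"unknown tool {tool_use['name']}"}, "error"
        else:
            payload, status = handler(**tool_use["input"]), "success"
        results.append({
            "toolResult": {
                "toolUseId": tool_use["toolUseId"],
                "content": [{"json": payload}],
                "status": status,
            }
        })
    return {"role": "user", "content": results} if results else None


def converse_with_tools(client: Any, model_id: str, messages: list, max_turns: int = 5) -> str:
    """Call the model, run any requested tools, and repeat until it answers in text."""
    for _ in range(max_turns):
        response = client.converse(modelId=model_id, messages=messages, toolConfig=TOOL_CONFIG)
        message = response["output"]["message"]
        messages.append(message)
        if response["stopReason"] != "tool_use":
            return "".join(block.get("text", "") for block in message["content"])
        tool_results = run_tool_calls(message)
        if tool_results is None:
            raise RuntimeError("stopReason was tool_use but no toolUse blocks were found")
        messages.append(tool_results)
    raise RuntimeError("model kept requesting tools after max_turns")
```

In production, `client` would be `boto3.client("bedrock-runtime")`. The same guardrail and IAM controls would then apply to every `converse` call inside the loop.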

Deployment options and quotas

- On-demand inference: Pay for what you use. Ideal for pilots, spiky traffic, and early experiments.

- Provisioned throughput: Reserve capacity for steady, high-volume workloads. Gain predictable performance.

- Quotas and limits: Each account and Region has defaults that you can raise through requests. Plan capacity during rollouts.

- Cost visibility: Track token use and runtime metrics. Set budgets and alerts to prevent surprises.
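Choosing between on-demand and provisioned throughput is ultimately arithmetic. The sketch below compares a month of on-demand token charges with a month of provisioned capacity. It then returns the request volume at which the two cost the same. All prices are hypothetical placeholders, not current AWS rates. The break-even figure also ignores whether a given number of model units can actually serve that volume, which you would need to confirm separately.

```python
from __future__ import annotations

HOURS_PER_MONTH = 730  # common approximation for hours in a month


def cost_per_request(input_tokens: int, output_tokens: int,
                     price_in_per_1k: float, price_out_per_1k: float) -> float:
    """On-demand charge for one request, with prices quoted per 1,000 tokens."""
    return (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k


def on_demand_monthly(requests: int, per_request: float) -> float:
    """On-demand spend scales linearly with traffic."""
    return requests * per_request


def provisioned_monthly(hourly_rate_per_unit: float, model_units: int,
                        hours: float = HOURS_PER_MONTH) -> float:
    """Provisioned throughput is billed per model unit per hour, whatever the traffic."""
    return hourly_rate_per_unit * model_units * hours


def break_even_requests(per_request: float, hourly_rate_per_unit: float,
                        model_units: int, hours: float = HOURS_PER_MONTH) -> float:
    """Monthly request volume at which both options cost the same."""
    return provisioned_monthly(hourly_rate_per_unit, model_units, hours) / per_request


if __name__ == "__main__":
    # Hypothetical prices for illustration only; look up current rates for your model and Region.
    per_req = cost_per_request(1000, 500, price_in_per_1k=0.003, price_out_per_1k=0.015)
    print(f"on-demand per request: ${per_req:.4f}")
    print(f"break-even: {break_even_requests(per_req, 20.0, 1):,.0f} requests/month")
```

Take a workload averaging 1,000 input and 500 output tokens per request, at hypothetical rates of $0.003 per 1,000 input tokens and $0.015 per 1,000 output tokens. It costs about $0.0105 per request on demand. At a hypothetical $20 per model unit per hour, one unit costs $14,600 for 730 hours. It only pays off above roughly 1.39 million requests a month.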

Common enterprise use cases built on Bedrock

- Customer support copilots that answer questions and escalate with context.

- Sales enablement assistants that draft outreach and summarize research.

- Knowledge search that cites trusted documents and policies.

- Document processing for contracts, RFPs, and onboarding packs.

- Software help for code suggestions, reviews, and runbook guidance.

- Marketing content generation with tone and brand guardrails.

What are some real-world AI implementations on Amazon Bedrock?

Finance

Financial firms depend on AI to power fraud detection, transaction monitoring, and smart underwriting, but the hurdles are significant: continuous audit trails, complex rules, and zero tolerance for downtime. A recent GoML project shows the practical value of Bedrock in this landscape. GoML built a real-time transaction monitoring engine for Miden, using Amazon Bedrock AI to detect anomalies as they happened. The system helped reduce fraud risk and cut response times. Crucially, it remained fully auditable and compliant with internal risk management policies.

Another example comes from Ledgebrook. GoML developed a conversational AI chatbot for Ledgebrook’s underwriting operations, using Bedrock to let underwriters instantly query policies, files, or claims. The solution cut document retrieval time by 70% and automated policy classification with over 90% accuracy. It minimized manual errors while satisfying strict audit and compliance requirements, a win-win for business users and compliance teams alike.

Healthcare

Healthcare isn’t just about managing clinical records anymore. It’s about delivering better care, faster, with full compliance. GoML’s collaboration with Max Healthcare is a case in point. GoML built an AI copilot on Amazon Bedrock AI that lets clinicians query longitudinal patient data in natural language. Instead of wading through stacks of records or pinging multiple departments, doctors get real-time patient summaries and decision support simply by asking questions in plain English. The impact? Faster clinical decision-making, enhanced accuracy, and strict HIPAA-compliant handling of sensitive health data. Bedrock provides the underlying infrastructure, so the solution is both scalable and secure. Healthcare providers are spared the operational pain of managing their own AI infrastructure.

Life Sciences and Pharma

Pharma and life sciences organizations face some of the most demanding regulatory and audit scenarios anywhere in business. Automation is essential, but only if it meets the letter of the law on traceability, documentation, and data privacy. GoML’s work with SagaxTeam, built on AWS and powered by Amazon Bedrock AI, shows how the stakes are rising and how the right AI stack delivers results. The solution was a pharma compliance agent designed to automate audit preparation and review. The system delivered 80% faster audit cycles, automated 70% of prep tasks, and reduced documentation errors by 50%. All processing, classification, and storage happened securely within the boundaries of the AWS cloud. This gave compliance officers and audit teams confidence in the system’s reliability and security.

Why is choosing Bedrock AI the right strategic move?

For many large organizations, the barriers to AI adoption aren’t a lack of ambition. They’re complexity, security, and compliance. Amazon Bedrock AI removes much of that friction. Enterprises can innovate without worrying about their data leaking into public models or their workloads suddenly incurring unexpected costs. Bedrock offers orchestration and monitoring options along with seamless integration with AWS security controls. That means less time spent fighting fires and more time delivering value.

What stands out is how Bedrock gives business and technical teams the confidence to move forward. Whether it's building intelligent agents, deploying compliance automation, or turning data into actionable insights, Bedrock AI provides a grounded, stable set of tools that let enterprise teams focus on outcomes.

How GoML helps you win with Amazon Bedrock AI

Getting from pilot to production takes more than APIs. You need the right architecture, data pipelines, safety rules, and cost controls. You also need a rollout plan that fits your teams and risk profile.

GoML is a leading Gen AI development company that is also an official AWS Gen AI Competency Partner and AWS Machine Learning Partner. Our team helps you:

- Plan the right architecture: Set up secure landing zones, VPC endpoints, identity, and network paths.

- Operationalize RAG and agents: Build knowledge bases with clean ingestion and testable prompts. Wire up agents to your systems safely.

- Tune for quality and cost: Run model evaluations on your data, select the right models, and set budgets that hold up in production.

- Harden safety and governance: Apply guardrails, implement review loops, and automate change management across environments.

- Ship fast with confidence: Move from proof of concept to a stable rollout using proven patterns.

What are the next steps to get started?

- Pick one use case that has clear value and a narrow scope.

- Test two or three models in Amazon Bedrock AI and run a simple evaluation.

- Add guardrails and a knowledge base if the use case needs facts.

- Choose between on-demand and provisioned throughput based on expected load.

- Roll out to a small group, monitor quality and cost, and scale in phases.

Amazon Bedrock AI gives enterprises a practical path to deploy AI at scale. You get choice, safety, governance, and strong integration with the AWS stack. Teams can experiment fast, control risk, and move successful ideas into production. GoML helps you design the right architecture, tune quality, and deliver results that last. Start small, measure outcomes, and grow with a platform that supports your long-term strategy.

This article is part of our comprehensive guide to AWS AI. Explore the guide to learn more about AWS AI tools like Bedrock and SageMaker, AWS AI LLMs like Nova, and why AWS AI infrastructure is the best way to build generative AI solutions that can scale in production.